In this episode, Thomas Betts talks with Kurt Bittner and Pierre Pureur about continuous architecture. The discussion covers the role of a software architect, the importance of documenting architectural decisions, and why you need a minimum viable architecture for any minimum viable product.

Key Takeaways

- Architectural decisions are different from other design decisions because they are exponentially more difficult to reverse.

- Capture “why” decisions were made using architecture decision records, ADRs. Store your ADRs as close to the code as possible, where they are more likely to be maintained.

- Quality attribute requirements, QARs, define the architectural requirements of a system. Question the QARs, because they may not be correct, and find ways to test them.

- Minimum-viable products, MVPs, are not only for startups. Every measurable product increment that adds value to the user is an MVP.

- Every MVP has a corresponding MVA, the minimum-viable architecture. The MVA and MVP should remain close together as they evolve.

Transcript

Intro

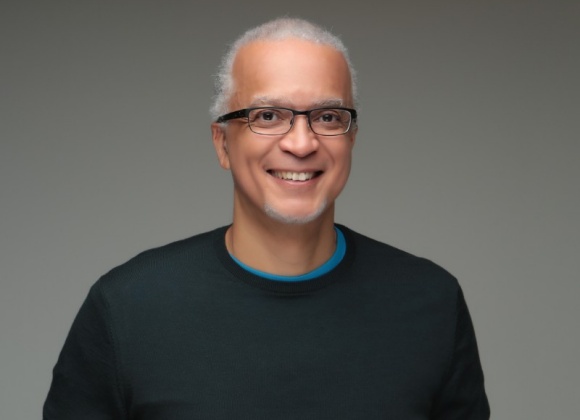

Thomas Betts: Hello, and welcome to another episode of the InfoQ Podcast. I'm Thomas Betts, and today I'm joined by Kurt Bittner and Pierre Pureur. Kurt and Pierre have co-written several articles for InfoQ, beginning with a seven-part series on Continuous Architecture. And lately, they've been exploring the concept of a minimum viable architecture. I personally find the situations they describe very relatable, and I found many of their ideas are influencing how I perform my role as a software architect and engineer.

So, welcome to the podcast. Could you both please tell us a little bit about yourself? Kurt, would you like to go first?

Kurt Bittner: I began my career a scary number of years ago, because it goes fast. I work for Scrum.org, and what I do is focus on enterprise agile issues a lot. And that usually has most people saying, "Well, what does that have to do with architecture?" But my roots are in software development and then evolved into software architecture.

And I find that a huge amount of what organizations are seeking when they're looking to be called agile (and I don't really like the term, and we can maybe talk about that a little bit), what they're looking to achieve really requires software architecture to do it. And so I'll leave it with that, but this is a hugely important topic to me. And so that's what motivates me to work with Pierre and to try to explore these ideas, so I think we can leave the rest of it for the podcast.

Thomas Betts: And Pierre?

Pierre Pureur: Hi. So like Kurt, I've been a software architect for longer than I can remember. I retired from the corporate world about a couple of years ago. I was a chief architect for a very large insurance company at some point, and this has given me time to actually reflect on all the things I did right and wrong during my career in software architecture, of course. And all the things that we do and don't necessarily make a lot of sense.

That's what led us to, well, first I co-wrote a book about five years ago on Continuous Architecture, where actually we introduced in that book the concept of minimum viable architecture, so these things relate. Then about two years ago we wrote another book called Continuous Architecture in Practice, may as well try to put it in practice. And after that, Kurt and I decided to explore a little bit more some of the ideas in that book and try to make the concept of continuous architecture or architecture in general, a little bit more practical and I would say implementable. So we're digging into that.

We have been lately, as you say, Thomas, we've been digging into the concept of minimum viable architecture which seems to be very, very promising. So we'll see.

Software Architecture: It Might Not Be What You Think It Is. [03:08]

Thomas Betts: All right. So I want to take us back to that first article that you wrote on the Continuous Architecture Series. It was titled Software Architecture: It Might Not Be What You Think It Is. And with a headline like that, it got some people going in the comments and discussion, with a lot of conversation around the role and responsibilities of software architects.

So, what do you see as some of the common misconceptions about what software architecture is and what software architects are supposed to do?

Pierre Pureur: I think the first question in my mind is, is there a role called software architect? We talk about software architecture, we talk about software architect as a given. And I was myself, my job description for a long time was software architect. But the question is, is there really a role for that? So that's the first, and I'm not saying there is or there is not, but I think the people should really ask themselves, is that really the role?

There's a lot of talk about software engineering lately and the role of software engineer. And there's even a school of thought that believes that architecture is part of software engineering. And I've been witnessing a lot of the people I know well at companies like Google, for example, having their job title move from software architect to software engineer. They still do the same thing, by the way, but the title changes, so that's another good question.

And then the big one for us is, should an architecture be developed upfront or not? As in the traditional, old concept of waterfall, do I create the architecture as a big bang, or should we recognize that we can't really do it upfront? And should we really develop it as we go? Which makes people cringe, but that's really what's happening in reality.

Kurt Bittner: To that I would add, my experience working with agile approaches has led me to a point where basically, and we wrote an article about this later in the series. But it leads me to basically a skeptic's view, is to say that everything that anyone can say about the system is an assertion that may or may not be true. And with that, you have a set of requirements, those may or may not be true. You have a set of assertions about how you're going to meet those requirements. Those may or may not be true or may or may not be achievable.

And so everything that you do in building a software system basically needs empiricism to test the ideas. And then we circle back to the original article where we said, "The software architecture isn't a set of diagrams, it's not a set of review meetings, it's not a software architecture document, but it is a set of decisions that the team makes about some critical aspects of the system. How it's going to handle memory consumption, how it's going to handle sessions, how it handles throughput, how it handles security."

And those decisions get made whether or not you have a software architect role, whether or not you even recognize that you have a software architecture. Our assertion is that it's important to recognize that you do have a software architecture because of the decisions you make, whether you realize that or not, and that you're better off being explicit about those decisions and being explicit about your assumptions and then testing those assumptions. In a sense, what we're trying to do is inject empiricism and skepticism into the software architecture discussion.

Pierre Pureur: That is a very important concept beside the decisions. One thing we have to realize is, there is really no absolute right or wrong way in software architecture. Software architecture is really a series of compromises, what some people call trade-offs. And that makes some people uncomfortable because, if you start basically building software architecture and you recognize from the start that your software architecture may not be right, maybe just good enough, but just good enough. That makes people cringe a little bit. It's very uncomfortable.

So the concept of trade-offs, to me it's really what makes a good, I even hesitate to use the word architect anymore, but a good practitioner, good or bad. To be able to actually make the trade-offs when it matters and try to limit the downsides, if that makes sense.

Write down your architectural decisions using ADRs [07:14]

Thomas Betts: I think one of the quotes that I dug up when I was reviewing the old articles was, "Software architecture is about capturing decisions, not describing structure." And those diagrams, the boxes and arrows, we think, "Well, that's the architecture." And you're saying that idea falls short, and I think that's one of the places that introduced me to the concept of architecture decision records, and the purpose of those being to write down those decisions.

Can you talk about that a little bit more about why that is maybe a better way of capturing the architecture than having just the boxes and arrows?

Pierre Pureur: Absolutely. Decisions are going to stick, unfortunately or fortunately, I don't know which one sometimes, for a long time. And I think that's very important as we make decisions.

And arising from your question, there's another one, which is what is an architecture decision versus a design decision? That's an interesting question as well. But as we make decisions, I think it's very important to record them somewhere. It doesn't really matter where, but it's important we keep a record of who made a decision, why, and when. And when you decided on a way to go, on basically what we call a solution, it's important to understand also which ones you discarded. Because you can learn more from what you discarded than from what you selected, it's very interesting.

Where you keep your ADRs doesn't really matter, but what we think is good practice is to keep them as close as possible to the code. So if you happen to use GitHub for example, it's a good idea to put your decisions on GitHub because the closer they are to the code, the bigger the chances are that they will be maintained. The last thing you want is an ADR that gets stale because then the decisions are just meaningless at this point.
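As an illustration of the kind of record described here, the following is a minimal Python sketch of an ADR stored as a file next to the code. It is only a sketch under stated assumptions: the field names, the `docs/adr` directory, and the file-naming scheme are hypothetical and are not a format prescribed in the conversation.

```python
from dataclasses import dataclass, field
from datetime import date
from pathlib import Path


@dataclass
class DecisionRecord:
    """One architecture decision record (ADR). All field names are illustrative."""
    number: int
    title: str
    decided_on: date                 # when the decision was made
    deciders: list[str]              # who made it
    context: str                     # why a decision was needed
    decision: str                    # the option that was selected
    rejected: dict[str, str] = field(default_factory=dict)  # discarded option -> reason

    def to_markdown(self) -> str:
        """Render the record as a small Markdown document."""
        lines = [
            f"# ADR {self.number:04d}: {self.title}",
            "",
            f"- Date: {self.decided_on.isoformat()}",
            f"- Deciders: {', '.join(self.deciders)}",
            "",
            "## Context",
            self.context,
            "",
            "## Decision",
            self.decision,
            "",
            "## Options considered and rejected",
        ]
        lines += [f"- {option}: {reason}" for option, reason in self.rejected.items()]
        return "\n".join(lines) + "\n"

    def write(self, repo_root: Path) -> Path:
        """Write the record under <repo_root>/docs/adr (a hypothetical location)."""
        adr_dir = repo_root / "docs" / "adr"
        adr_dir.mkdir(parents=True, exist_ok=True)
        slug = self.title.lower().replace(" ", "-")
        path = adr_dir / f"{self.number:04d}-{slug}.md"
        path.write_text(self.to_markdown(), encoding="utf-8")
        return path
```

Calling `write(Path("."))` from the repository root would place the file in the same repository as the code, so the record can be reviewed and updated in the same pull request as the change it explains.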

Kurt Bittner: The other thing, your question, one of the things that for me is implicit in it is, what is an architectural decision? So the simplest definition that we came up with is that, it's one that becomes in a sense exponentially costly to reverse.

And I don't mean it exponentially in the exact mathematical sense, but I mean that design decisions, the decision to use one component versus another. Relatively simple, you change a set of calls and it's relatively easy to replace. What's harder to change is the whole concept behind that. And what usually that means is that your significant data structures that you're passing around in modern parlance, the major classes that you use to exchange information between different parts of the program. Those decisions become very costly, your ways of dealing with concurrency, your assumptions about essentially timing of events and things like that. If those turn out to be wrong, you're talking in some cases complete rewrite.

And actually there's a situation that's worse than complete rewrite and that is if anyone has ever remodeled a house, you realize that it's more expensive than building a new house because you have to tear out all the old stuff and then you've got to put in all the new stuff. So you've got the cost of dismantling and the cost of rebuilding. And the same thing happens in software.

And so those architectural decisions are those things that are extremely expensive to change. And the importance of the architectural decision record or ADR, is that you ought to preserve for the future people who will work on this system, your thought process at the time. So they can look at that, and they can either agree with your decision or realize that decision doesn't apply anymore and we're going to have to make a different one. So I think that's the thing is, it's like leaving breadcrumbs.

Well breadcrumbs, maybe not in a Hansel and Gretel sense, but markers along the way that say, "I was here, this is why I made this choice." And that's for people in the future, and that may mean a year from now, it might mean 20 years from now, it might mean 40 years from now. Because we do have some systems that have been around 40 or 50 years now, and for most of them you look at it and you have no idea why people made the decisions.

So anyway, that's sort of for me is why the decisions end up being important is because architectural decisions are extremely expensive to reverse, and it can be a mystery to figure out why you decided to do that unless you leave some rationale.

Thomas Betts: I think I described it to somebody as watching the Olympics, and imagine if all they showed was the people who got the gold medal. You don't see any other people, whether they got the bronze or silver or ran the race and you don't get to see the event at all, you just get the results. And sometimes what the diagram captures is, this is what has been decided. And going back and saying, well, why was that decided? And if you don't have it, you don't have those breadcrumbs, that explanation of why did we make this decision? Why did we choose this over something else and what were the other things we considered? Having that for the history is important because a year or two from now when somebody says, "This isn't working very well. Let's just throw it out and start over." Well, why do we not want to do that? Why did we go down this path? And maybe you make a small adjustment based on being able to see those previous decisions.

Understanding quality attribute requirements, QARs [12:00]

Thomas Betts: I think that gets us into the idea of you're talking about trade-offs and those trade-offs come down to the quality attribute requirements. One of those guiding principles you talk about for continuous architecture and a lot of the articles you've written are quality attribute requirements, QARs, that's things like performance, scalability, security, resilience. Some people call these the -ilities, right?

So why is that important to think about early on? Why do you need the QARs early, even if you're building an MVP or something that's just going to have a few users or a small workload?

Pierre Pureur: Any architecture is very driven by QARs, I mean there is no... If all you have is the set of functional requirements, then any blob of code will do. Unfortunately, you won't be able to run it, but it will do. The QARs are really, and I think that's being recognized much more than it used to be 10 years ago, but now people realize that QARs are important. The challenge with QARs is, it's really hard to pin them down. And you get into a paradox which is, you need QARs to make decisions to build an architecture, but you don't really know what the QARs are.

Take scalability, for example, okay. When you start a system, you try to guess, and I use the word guess on purpose, how much you should scale your system. It's a guessing game, nothing more.

And unfortunately, nowadays people just solve that by saying, "I'm just going to put that in the cloud somewhere." It becomes the cloud provider's problem, not my problem, I just have to sign a check. But that doesn't work very well, clearly.

And part of the problem with scalability for example, is that you may want to scale up or scale down depending on how successful your product is or is not. And hence the problem because actually it's difficult to scale up, it's even harder to scale down. So you may be stuck with an architecture which basically you don't need anymore.

So, back to the discussion about reversing: take reversing a decision that was made to make your system scalable. Say, I don't know, I decided I should be scalable. I should be able to handle a gazillion transactions a day. And oops, I didn't get a gazillion transactions, what do I do now? So you basically are stuck with the consequences of your decision.

And also that led us, and we're probably not going to talk much about that today, but that led us also to try to understand, what is the impact of architectural decisions on technical debt?

Kurt Bittner: The other thing about QARs that's interesting: earlier I introduced this idea of skepticism. And so you have to also question the QARs, because the people who are paying for the system, I'll just call them the business people, are well-meaning, but they might say, "Well, we want the system to be infinitely scalable." Well then, I can almost guarantee it's going to be infinitely expensive.

And so the problem is that the QARs you get might be right or they might not be right. They're basically what the people who are giving you that information believe is true at the time and they mean well, but they might not actually be what you need. And so as you deliver product increments, and we'll talk about the MVP-MVA pair later. But as you deliver increments of the system, you learn about performance, scalability, security and all of these other qualities, if you're looking to learn. So you have to build tests in to learn about those things.

But you learn about these things and then you might discover that the QARs aren't right, that they're over-specified and you need to scale them back, or you might learn that they're not aggressive enough and they need to be amped up. So there's this constant interplay in the information that you have available, both the QARs and your ability to respond to that.

I guess the important thing for me is that the initial QARs are a starting point and you learn more about it, just as you learn more about what you build in the performance of the system which is complex. And you can't always predict the interaction between things at the beginning. And you also might find that you have two different QARs and they're basically in conflict. You can't achieve one and achieve the other. And so that's where you get into trade-offs, and not just trade-offs in the design of the system, but also trade-offs in the actual QARs themselves.

Testing your quality attribute requirements [16:12]

Thomas Betts: Right. So you need it to be more performant or less performant, but now we have new security requirements, so we cannot budge on those and so we have to sacrifice a little bit of performance because security requirements are there. And I think you mentioned a key thing is you have to test these. And I can't remember if it was one of your articles or some other thing on InfoQ that got me to the idea of basically a scientific method for testing these: you form a hypothesis about your QARs, like we think we need this performance or we think this is going to be adequate. But you don't know until you write it down and test it and then check the results and have that feedback cycle. I think we see that done fairly well in product functionality. We're going to put this in front of the customer, we're going to do A/B testing, some way to figure out, is this the feature that they need? You're talking about the same sort of thing for the architecture requirements, testing those, right?

Kurt Bittner: I encountered this really early in my career. So after being a developer then I went to work for a large relational database company, and almost the first thing that I did was performance benchmarking of different system configurations. And you learned a lot about how to tune and how to improve performance. And sometimes what you found is in a sense that the relational database design was wrong, you needed to denormalize it or you needed to do something. But that notion of testing it, actually, not just looking at it on paper, but actually building at least a prototype of it, if not a small portion of the real system and testing it ends up being really important. And then you can extend that idea to virtually everything.

And the art of architecture in a sense is trying to figure out what's the minimal subset of the real system that you need to build to figure out whether your architectural hypotheses are correct.
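The hypothesis-and-test loop discussed in this exchange can be sketched in Python. This is a minimal illustration, assuming a QAR can be written down as a numeric target on a latency percentile; the class name, the field names, and the choice of a nearest-rank percentile are assumptions for the example, not something the speakers specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyHypothesis:
    """A QAR phrased as a falsifiable claim (names and fields are illustrative)."""
    name: str
    percentile: int      # e.g. 95 for p95
    target_ms: float     # the claim: observed percentile <= target_ms


def nearest_rank(samples_ms: list[float], percentile: int) -> float:
    """Nearest-rank percentile using integer arithmetic; percentile is 1..100."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    if not 1 <= percentile <= 100:
        raise ValueError("percentile must be between 1 and 100")
    ordered = sorted(samples_ms)
    rank = (percentile * len(ordered) + 99) // 100   # ceiling of percentile * n / 100
    return ordered[rank - 1]


def check(hypothesis: LatencyHypothesis, samples_ms: list[float]) -> tuple[bool, float]:
    """Return (supported, observed) so the evidence is kept alongside the verdict."""
    observed = nearest_rank(samples_ms, hypothesis.percentile)
    return observed <= hypothesis.target_ms, observed
```

Returning the observed value together with the verdict keeps the measurement available for the follow-up question raised in the conversation: whether the design needs to change, or whether the QAR itself was wrong.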

Pierre Pureur: And I think this is a critical point because if I recall back to my years in that insurance company, we tended to really test, yeah, we tested, but it really happened late in the development cycle. So one can reflect on that and say, "Wait a minute." At that point, if you find something really drastically wrong, what are you going to do about it? Probably not much because you're committed to a certain delivery date and you have to make that date.

I think building as little as possible of the system, of the architecture really, and testing it as soon as possible is critical. Very important point.

Minimum viable architecture, MVA [18:25]

Thomas Betts: Well, I think that gets us, I hinted at a little bit the minimum viable architecture. People are fairly familiar with the term minimum viable product, but I also know that that's one of those terms, MVP, that gets thrown around casually. And maybe let's start with that just to get on the same page of what an MVP is. What is an MVP and what is it not in your mind before we start talking about the architecture?

Pierre Pureur: So Kurt and I were working on, I don't remember when that happened. That happened probably maybe a year ago, a couple of years ago, when we were working on one of the first MVP and MVA articles. And we said, "Wait a minute." Most people think MVP is something for startups. If I'm a startup, I want to roll out a minimum viable product as soon as possible to test my customer base. And the product doesn't need to be clean, it doesn't need to be pretty, it can just be something that I can put in the hands of customers, it could be just a mock-up. But more important, I want to find out whether my customers want to buy or not.

And we started thinking and said, "Wait a minute." The definition is limited because if you expand it and you say, "Now I have a new product. I'm an established company and I have a new product I want to roll out to my customers." Will it make sense to start releasing by little parts if possible at all, little bits of product and try to test the impact on my customer base? And before it's too late, when I basically find out that my customers aren't buying what I'm trying to sell, I can back out and change direction.

The MVP concept became to us more like something where we take a big release and we cut it down into smaller parts, and each smaller part becomes an MVP. And then of course we extend the definition and say, "Okay, if that applies to MVP, why not really have a similar definition for architecture, an MVA?" So each MVP has an associated MVA. In one of the articles we talk about two climbers linked by a rope, and that's basically the concept.

Kurt Bittner: I've got to expand on a couple of ideas in that. In Scrum, which I'm most familiar with, the intent of every sprint is to produce a potentially valuable product increment. Well, essentially every sprint or every product increment is really an MVP when you think about it, testing a set of assertions about the value of things that you are developing in that increment.

It was a natural extension for us to think about, "Well, how much architecture do you need to support that MVP?" Well, the way that we came around to it is that the MVP and the MVA are linked. As for how much architecture you need: your MVP, in a sense, if it's successful, is kind of a contract that you make with your customer, saying, "Here's a bunch of functionality that we're delivering to you." If you like it, we're in a sense committing that we're going to support that for the life of the system or the life of the product.

Ah, okay. Well, that gives us some guidance on the MVA is that we need enough architecture to make that statement true. To say, "Yes, we could support that," doesn't mean everything has to be in place. We could say, "Yeah, at some point the number of users is going to grow beyond a certain point. We're going to need to rip out this and bolt in that." But we think that the work associated with doing that is within in a sense the acceptable cost of the system. So okay, we could accept that. Then our MVA doesn't need to be super scalable right now, but as long as we have a path to super scalable, if that's ever needed, we could get there and so on.

So that notion that the MVP and the MVA march along almost like these two people linked by rope. The MVP could get a little farther ahead at some points, and the MVA could get a little farther ahead at some points, but they're linked together because you're making these commitments every release that the system is going to be supportable.

Anyway, so that's the idea. And then maybe the second thing to mention about the MVP, is that I think some people think about it as a throwaway prototype, and we don't. We think about it as it's an initial commitment to basically support the functionality that you're delivering.

And so many times I've seen organizations and teams make this mistake is that they think that the MVP is a throwaway, but then the user loves it and they go, "Well, let's put it into production next week." And the team is just freaking out because they know the system won't support that. So rather than getting yourself into that problem in the first place, if you make sure that the MVA matches up with in a sense the expectations behind the MVP, then you've got a chance for incremental releases that don't disappoint people.

Thomas Betts: I like the idea of that rope analogy, of two people that either one can be in the lead, but it can't be so far out. And it goes back to the idea you said, not having the waterfall, big bang architect everything up front, the architecture didn't go off running ahead and blaze the trail, and now the product gets to follow it. Because the problem with waterfall was always, if that trail that you blazed wasn't correct and you didn't know until you started developing it, then what do you do? And that's why you want to keep it closer.

So yes, you can go forward with some of your architecture decisions, but keep that balance of, what do we need for the next two weeks, the next three months? Whatever horizon you're looking at, that's really the constraint: don't over-architect it.

Look far, look near [23:37]

Pierre Pureur: And associated with that is the realization that some of your or most of your decisions may be wrong at some point or may become wrong at some point. So the question you have to ask yourself is, what happens if they are wrong and what do I need to do to reverse them? And it's a scary thing.

Kurt Bittner: There's an analogy, when I was in my 30s I was really gung-ho on whitewater kayaking. And there's a technique you learn pretty quickly and you can describe it as look far, look near. So you need to look far to see where you ultimately need to go and you can't let your short-term decisions get you trapped into a place that would become undesirable or even very dangerous. And yet that rock that's in front of you immediately, you need to get around that thing. So you've got to look near to make sure that the near-term decisions are correct, but you also have to look far enough ahead to make sure that you're not essentially making decisions that are going to put you in a bad place. And in the product sense, in a bad place means that the product won't be supportable anymore or it won't be viable anymore.

I think that if you think, look far, look near, it gives you some idea of what you're doing when releasing product increments. You can't completely ignore where you think you're headed in 18 months, and you can't just make sets of two-week decisions and never look ahead. But you also can't only look ahead, because you're going to end up on a rock, bound in a place you don't want to be either.

Pierre Pureur: Another trade-off.

Continually test earlier architecture decisions [25:03]

Thomas Betts: Pierre, you said sometimes you make the wrong decision, and we can always say it's right and wrong, but there's also no right and wrong answers, that it's always trade-offs. And it comes back to the idea of if you have some way to test it, you didn't find out that you were wrong, you found out we didn't meet our goals. Or, our architecture requirements said this, and our new product that we did in the last two weeks, the last month, we can't support it, we need to change something. But that gets to the smaller changes right? That this is the minimum viable architecture and maybe the next sprint you're going to focus on making the architecture catch up to where the product went. Is that right?

Pierre Pureur: Mm-hmm.

Kurt Bittner: That underlines one thing, which is that for developers, it's good to become familiar with either load testing tools or to be able to write your own load testing drivers because ultimately, you need a way to simulate those loads that you might get at some point. And being able to do that with some randomization, so that you don't create systemic biases in your testing itself, is a good skill to develop. And that gets into cross-functional skills on the team. You can't basically enlist another testing group to do this for you. You as a developer need to develop the skill to test your own software, and in an architectural sense that a lot of times means different kinds of load factors on the system.
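A hand-written load driver with randomization, of the kind mentioned here, might look like the following minimal Python sketch. Everything in it is an assumption made for illustration: the `run_load` function, the weighted operation mix, and the exponentially distributed gaps between requests are one plausible design, not the speakers' method, and the callables stand in for whatever the system under test exposes.

```python
import random
import time
from typing import Callable


def run_load(
    operations: dict[str, tuple[float, Callable[[], None]]],
    requests: int,
    mean_gap_s: float,
    seed: int,
    sleep: Callable[[float], None] = time.sleep,
) -> dict[str, list[float]]:
    """Call randomly chosen operations with randomized gaps; return latencies per operation.

    operations maps a name to (weight, callable). Both are placeholders for
    whatever requests the system under test accepts.
    """
    rng = random.Random(seed)            # seeded so a surprising run can be replayed
    names = list(operations)
    weights = [operations[name][0] for name in names]
    latencies: dict[str, list[float]] = {name: [] for name in names}
    for _ in range(requests):
        # Exponentially distributed gaps avoid a fixed rhythm between requests.
        sleep(rng.expovariate(1.0 / mean_gap_s))
        name = rng.choices(names, weights=weights, k=1)[0]
        start = time.perf_counter()
        operations[name][1]()
        latencies[name].append(time.perf_counter() - start)
    return latencies
```

Passing the sleep function as a parameter is a deliberate choice in this sketch: a real run uses `time.sleep`, while a quick check of the driver itself can pass a no-op so it finishes immediately.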

Thomas Betts: Well, I like that; it goes back to the idea that we had started talking about at the beginning: is there a role of an architect? And you said, "Every engineer has to have some responsibility of doing that, and that being able to test it is one aspect of that. If you're going to come up and make the decision, you need to be able to validate those decisions and that anyone on the team can theoretically do that work." And again, don't hand it off to the architect to figure out or the load test team to figure out, it's part of the product.

Using a framework can hide some architecture decisions [26:44]

Pierre Pureur: Absolutely. One thing, Thomas, we actually didn't mention when we were talking about decisions is the concept of decisions that are being made for you. What I mean by that is when you use a framework, when you use any external open software, some decisions were made when that software was built or the framework was built. And people don't necessarily realize that by using that framework, that software, you actually end up having that framework making decisions for you. And there are consequences to that as well, almost hidden decisions that come back and haunt you at some point. And security is of course an obvious one.

Thomas Betts: And using those frameworks, that's another trade-off. Maybe it gets us there faster because a lot of this infrastructure and the low-level code was already taken care of for us and we didn't have to write that. But maybe that will only meet our needs for the first year and then we have to come back and revise it.

Don’t abdicate your architecture decisions to an AI [27:37]

Pierre Pureur: Yes. And when I hear more and more people relying on AI to help with architecture, therefore helping with decisions, I get a little scared, because I think the three of us use chatbots quite a lot, right? I use Gemini quite a lot every day, and sometimes you get plain wrong answers. The thing is, sometimes it's easy to check: for example, if you ask for some information which is available on the web, you can double check it easily. When you use that to build an architecture, this becomes a little bit of a dangerous situation.

Thomas Betts: I think when ChatGPT first came out, I did have it write an ADR for me just to see what it came up with. And it was in the ballpark, it wasn't completely off base, but I also knew enough because I had it write one that I had already written. So I knew the answer I'd come up with and I could see like, "Well, here's where I would ask it more questions." And if you treat the chatbot as the rubber duck, the pair programmer, and have the conversation with it, then it's useful. But I think you can't have the chatbot play the role of the architect and then you don't have any responsibility. It's just another tool to help you make these decisions.

Continuous architecture [28:41]

Thomas Betts: So everything we've been discussing I think has built up to the idea of continuous architecture and I wanted to wrap up with that. What exactly is continuous architecture? What's the core idea, and what are the benefits that you see that it provides?

Kurt Bittner: If we go back to a few things that we talked about, architecture is about making decisions. So then continuous architecture means continuously examining, testing and proving out the decisions that you're making about the system. It's easy to associate it with different kinds of automation, like continuous integration or continuous delivery. And those are important technologies, maybe for continually assessing the quality of your decisions.

For example, you might build in a set of tests that analyze the load performance of the system and you build that into your continuous integration pipeline. And then in every single build, you get feedback on whether that system is actually meeting its performance goals. You want to do that testing in the background, by the way. You don't want to have it be synchronous.
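As a rough illustration of the kind of check Kurt describes, here is a minimal Python sketch of a load test that a pipeline step could run on every build. Everything named here is an assumption made for illustration and was not mentioned in the conversation: the `handle_request` stand-in, the 50 ms budget for 95th-percentile latency, and the function names. The check is submitted to a background worker so that other build steps are not blocked while it runs.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical QAR: 95th-percentile latency must stay under 50 ms.
P95_BUDGET_SECONDS = 0.050


def handle_request() -> None:
    """Stand-in for the operation under test (hypothetical)."""
    time.sleep(0.001)


def measure_latencies(operation, samples: int = 50) -> list[float]:
    """Call the operation repeatedly and record each call's duration."""
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        operation()
        durations.append(time.perf_counter() - start)
    return durations


def p95(durations: list[float]) -> float:
    """Return the 95th percentile of the recorded durations."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(durations, n=20)[-1]


def check_latency_qar(operation, budget: float = P95_BUDGET_SECONDS) -> dict:
    """Run the load check and report whether the assumed QAR still holds."""
    observed = p95(measure_latencies(operation))
    return {
        "p95_seconds": observed,
        "budget_seconds": budget,
        "passed": observed <= budget,
    }


if __name__ == "__main__":
    # Submit the check to a background worker so other build steps are not blocked.
    with ThreadPoolExecutor(max_workers=1) as pool:
        future = pool.submit(check_latency_qar, handle_request)
        # ... other build steps would run here while the check executes ...
        report = future.result()
    print(report)
```

The report gives each build a piece of evidence about one decision: if `passed` turns false, either the design no longer meets the QAR or the QAR itself needs to be questioned.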

I think that you can utilize these different continuous something technologies to assess the quality of your decisions continuously. But it's also a mental frame of mind that you adopt about continually questioning your QARs, continually questioning the ability of your design to meet those QARs, and then continually gathering feedback to see if your decisions are right and continually making trade-offs.

So in a sense, you could net it out to say you're never really done with architecture, you're always continually reassessing whether the architecture is still suitable. And that's why we wanted to pair up that minimum viable architecture with the minimum viable product. So in every single release, you should be asking yourself, "Are our decisions still valid? How do we know? And what evidence do we have that supports that?" And it's not just a set of beliefs. So that's what it means for me, is continuously testing your assertions and your beliefs about the system's sustainability.

Pierre Pureur: Absolutely. I think that the whole concept is that architecture is never done and therefore, you're never done with decisions. Decisions in themselves will evolve. You'll have more decisions, fewer decisions. They will change unfortunately, but that happens. Architecture will evolve. And you don't really have a point in time when you make a decision. You really have this continuous flow of decisions that are being made over the lifetime of the product.

Thomas Betts: And I think the idea of expecting it to change over time changes your mental model. I think, Kurt, you were saying that if you go in, assuming you can make all the decisions up front and you're going to be right if you just spent enough time analyzing it, you're bound to be wrong in some of those. And rather approach it with the idea that, "We are going to be checking every so often." And it gets you into thinking I can make smaller decisions, and how do I validate those decisions? And all of that just affects your whole process. And that's something that the whole team needs to embrace, not just the one ivory tower architect, right?

Pierre Pureur: Absolutely.

Closing [31:34]

Thomas Betts: Well, I think that's a great place to wrap things up. Kurt and Pierre, thank you both for joining me today.

Pierre Pureur: Thank you.

Kurt Bittner: Thanks for having us on.

Thomas Betts: And listeners, if the subjects we talked about today are interesting and you want to read more or join the discussion, please go to infoq.com. There you can find all the articles written by Kurt and Pierre, and you can leave a comment on any of those articles or a comment on this podcast.

So thanks again for listening, and I hope you'll join us again soon for another episode of the InfoQ Podcast.