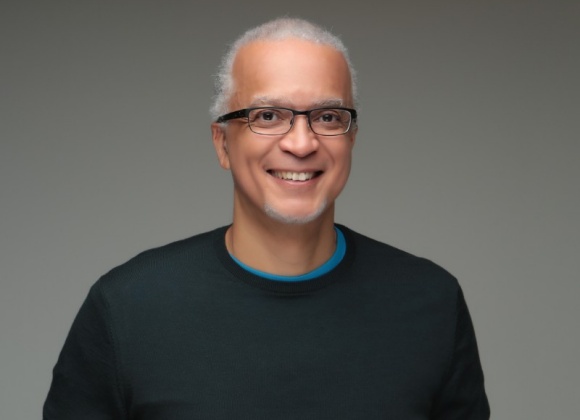

This week's podcast features Yao Yue of Twitter. Yao spent the majority of her career working on caching systems at Twitter. She has since created a performance team that deals with edge performance outliers often exposed by the enormous scale of Twitter. In this podcast, she discusses standing up the performance team, thoughts on instrumenting applications, and interesting performance issues (and strategies for solving them) they’ve seen at Twitter.

Key Takeaways

- Performance problems can be caused by a few machines running slowly causing cascading failure

- Aggregating stats on a minute-by-minute basis can be an effective way of monitoring thousands of servers

- Second-by-second data is often too expensive to aggregate centrally, but can be stored locally

- Distinguishing between request timeout and connection/network timeouts is important to prevent thundering herds

- With larger scale organisations, having dedicated performance teams helps centralise skills to solve performance problems

Show Notes

Yao Yue is a software engineer at Twitter. Much of her career has been around caching systems like memcached, or Twitter’s fork of it, and Redis. Recently she stood up a new performance team at Twitter. Today’s podcast will talk about the charter of the team, where and how you should be instrumenting for performance monitoring, and metric aggregation and interesting problems Twitter has seen.

How did the team come about?

- 01:45 I decided that what I did with cache, which is performance work, was more widely applicable for a large array of workloads.

- 01:55 We started looking at performance problems within Twitter, and the best way to do that is to start a dedicated team.

So what’s the charter of the team?

- 02:10 There are two things: one is to look at the infrastructure we have and the performance problems that affect pretty much everybody, and see what we can do to improve the performance.

- 02:25 The other is to build infrastructure for performance.

- 02:30 When people start talking about performance, they want to see what can be improved and how.

- 02:40 The first roadblock is they have no idea where things are bad - visibility leads to insight and insight leads to improvement.

What does building performance infrastructure look like at Twitter?

- 03:00 The status is that we have monitoring - observing the health of both hosts and services.

- 03:10 The infrastructure has limitations, which are most felt when you want to debug performance issues.

- 03:25 There might be micro spikes in traffic which might last a few hundred milliseconds, but can be obscured when you are monitoring at the granularity of a minute.

- 03:45 That calls for higher fidelity when monitoring, which is not provided by a general purpose monitoring system.

- 03:55 There are certain requirements for performance monitoring which are not generally available in a generic monitor.

- 04:10 We also look at a long trend, which only ties in loosely with performance monitoring - usually performance is about latency.

- 04:15 We also care about efficiency and capacity planning, which needs a long term view as well as the short term.

- 04:30 For example, does pushing out a new version cause a gradual performance decay?

- 04:35 Over the course of a year or two the performance degradation can become significant.

- 04:40 A human operator, before and after the launch, may not notice a difference right away - but if you look at the long-term trend it may become obvious.

- 04:45 So we’re looking at how to automatically monitor the long term trend.

What’s the scope of this - does machine learning come into it?

- 05:00 We’re considering machine learning as one of the tools - that can convert data into insight - but machine learning is a nice buzzword.

- 05:20 First, we make sure that we have data and that data is clean and trustworthy.

- 05:25 Second, we need to be able to derive something more meaningful from the huge amount of data.

How do you define latency?

- 06:10 Latency is not a purely objective thing - people tend to think of latency as a measurement.

- 06:20 In my opinion, latency is a collection of measurements depending on where you are measuring.

- 06:30 For example: if you have a service that has a fairly deep stack, using some framework with libraries, perhaps on a virtual machine or a container on hardware connecting to a network.

- 06:45 If you measure your latency at the top of that stack, it needs to traverse this very deep stack to get to the service that you’re interested in.

- 07:00 That latency will include all the work, and all the slowdown, that occurs in the software stack, the hardware stack, the network stack and back.

- 07:10 That would look very different if you were measuring as the request goes out to the network, for example by sniffing the network packets and taking timestamps of when it goes out and comes back; you’ll end up with different numbers.

- 07:25 What matters to application developers is probably the latency that they observe wherever they are interacting with the system.

- 07:35 Identifying where the latency is coming from often requires having multiple instrumentation entry points.

- 07:45 If you want to know whether your local stack is slow, whether the remote service is slow, or whether the network is glitchy, you’ll have to insert other measurement points to find out.
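
The idea of several measurement points can be sketched in a few lines of Python. This is only an illustration, not Twitter's tooling: the `measure` helper, the `timings` registry and the point names `application` and `wire` are all hypothetical, and the sleeps stand in for real work. The same request is timed at the top of the stack and again close to the network boundary, and the difference is attributed to the local stack.

```python
import time
from contextlib import contextmanager

# Hypothetical registry of timings, keyed by measurement point.
timings = {}

@contextmanager
def measure(point):
    """Record wall-clock time spent inside the block under the name `point`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings.setdefault(point, []).append(time.perf_counter() - start)

def remote_call():
    # Stand-in for the network round trip to a remote service.
    time.sleep(0.02)

def handle_request():
    with measure("application"):    # what the application developer observes
        time.sleep(0.01)            # stand-in for local work (framework, GC pauses, ...)
        with measure("wire"):       # measured close to the network boundary
            remote_call()

handle_request()

# Time that was spent locally rather than on the remote call.
local_overhead = timings["application"][0] - timings["wire"][0]
print(f"application: {timings['application'][0] * 1000:.1f} ms")
print(f"wire:        {timings['wire'][0] * 1000:.1f} ms")
print(f"local stack: {local_overhead * 1000:.1f} ms")
```

If the two numbers are close, the time is being spent remotely or on the network; a large gap points at the local stack.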

How do you tackle performance problems with your teams?

- 08:30 In the past we have had customers using JVMs, with GC cycles, who perceived very high latency numbers.

- 08:45 To help them find out whether it’s a client-side or a server-side issue, we start monitoring traffic on the host, using something like tcpdump to capture the flows sent by the application.

- 09:05 We compare those numbers with the numbers that the clients are observing at the top; if there is a huge discrepancy, we can identify that the latency is coming from the JVM.

- 09:25 Sometimes you can dive deeper into the stack; for example, if you’re using a library, you can instrument that.

- 09:35 You can then turn it on at a lower level in the server stack and see if it compares with what the application latency is.

- 09:45 If you have control of the box then you can do it on the system side as well.

What are you doing to instrument at each of the different layers?

- 10:00 It’s hard to be very thorough about this; it’s difficult to instrument layers that you don’t control.

- 10:10 It should only be done when there’s a need: usually when there’s a problem you’re trying to identify.

- 10:30 We’re looking to find outliers in our system.

- 10:40 We can do instrumentation on a case-by-case basis.

- 10:45 When you have a large-scale operation, what tends to happen is that things run just fine on 99% of instances, or 99% of the time.

- 11:00 There’s always one hotspot, or one instance somewhere that seems to be throttled all the time, or causing reliability issues.

- 11:10 These are difficult to surface when you have 10,000 instances running.

- 11:20 Another issue is - we were talking about general monitoring earlier on - those tend to give a fairly coarse, big picture view but aren’t good at zooming in to one or a few instances which are having problems.

- 11:40 You can’t afford to collect everything at a finer grain, so the ability to know where and when to zoom in, and having the flexibility to dial up or dial down the information collected provides a lot of value when it comes to chasing the outliers.

What do latency and triple nines mean?

- 12:15 Latency - as we talked about earlier - can mean measurements at different levels of the stack.

- 12:30 Tail latency is a term related to P999 and P99 latency; it is used because the mean or the average isn’t very useful.

- 12:55 This comes back to the service level agreement or SLA, which might require that 99.9% of requests come back within 500ms with a successful result.

- 13:15 This maps naturally to tail latencies - because anything that takes longer than 500ms is going to fail.

- 13:30 In this case latency translates to success or failure.

- 13:35 Where you have a lot of instances there may be a lot of variability, so often you will have one or more instances that don’t behave the same way, and they can eat into tail latency.

- 13:55 Tail latency means the slowest portion of all your requests and how long they take.

- 14:05 P99 means that 1% of all the requests will take longer than this number.

- 14:15 P999 means that 0.1% of all the requests will take longer.

- 14:30 There was a good article in Communications of the ACM called “The Tail at Scale”, by Jeff Dean and Luiz André Barroso of Google.

- 14:50 Scale exacerbates tail latency, because often when you fulfil a complex request you go through multiple services.

- 15:05 Those services may need to fan out to multiple instances to fulfil the request.

- 15:10 This is like caching - if you need ten things, you are probably hitting ten servers to get that information.

- 15:15 If any of those ten requests returns slowly, your overall request comes back slowly.

- 15:25 This very simple and easily understandable problem can, at a sufficiently large scale, result in a huge performance problem.

- 15:40 You have a much bigger chance of hitting a slow path or a slow machine which brings down the overall performance of your service or product.
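
A small Python sketch makes both points concrete. The latency samples are synthetic, the helper names are made up for this example, and the fan-out calculation assumes that slow responses are independent of each other, which real systems only approximate.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def chance_of_slow_request(fanout, slow_fraction):
    """Probability that at least one of `fanout` independent calls is slow."""
    return 1 - (1 - slow_fraction) ** fanout

# Synthetic latencies in milliseconds: 980 fast requests and 20 slow ones.
latencies_ms = [10] * 980 + [800] * 20

mean = sum(latencies_ms) / len(latencies_ms)
p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
p999 = percentile(latencies_ms, 99.9)
print(f"mean={mean} ms, P50={p50} ms, P99={p99} ms, P999={p999} ms")

# If 1% of calls to any single server are slow, how often is a fanned-out request slow?
for fanout in (1, 10, 100):
    print(fanout, round(chance_of_slow_request(fanout, 0.01), 3))
```

The mean sits close to the fast requests and hides the 800ms tail entirely, while P99 and P999 expose it. With a fan-out of 100 and a 1% slow rate per call, roughly 63% of overall requests hit at least one slow call.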

What is the process for dealing with thousands of servers?

- 16:20 With a few servers, it’s possible to be simultaneously observing all of them.

- 16:30 When you have a much larger scale operation, the bottleneck is aggregating all that data into one place.

- 16:45 Whoever is looking at that system cannot simultaneously be looking at thousands of servers.

- 16:50 This funnelling of information from a wide scope down to a single location is the bottleneck, and is the key difficulty in designing monitoring infrastructure at scale.

- 17:10 When you have a large scale system you cannot be collecting everything all the time.

- 17:20 What ends up happening is that you end up collecting very coarse information on every instance all the time, but you do want to get a lot more information about a specific host or location in your system.

Should you aggregate information before you send it to the collection point or after?

- 17:50 In production, what has happened at Twitter is people always want more visibility, especially when they run into performance issues.

- 18:00 When people see a micro incident or a micro spike, it could be a traffic spike that lasted less than a second, but it could cause disruption for other services.

- 18:25 If you look at the minute-by-minute metrics you may not see it.

- 18:35 A bump that happens in half a second is totally different to one that raises it up over a minute - they are different problems.

- 18:50 Being able to differentiate between something that is short and spiky and something that is long and smooth is the key to a lot of performance problems.

- 18:55 You can’t get that from minute-level aggregations.

- 19:00 However, if you dial it up from a minute-level to a second-level you generate 60 times as much data, and most of the time you don’t need that level of data.

- 19:15 Monitoring is very expensive when you are collecting large amounts of data.

- 19:25 The solution is we should aggregate a limited amount of metrics on a coarse enough level - the monitoring service shouldn’t cost more than the service that it is monitoring.

- 19:45 We don’t need to sacrifice visibility into finer-grained activity.

- 19:55 Getting everything into a central place is the expensive part of the operation.

- 20:00 Maybe you don’t have to aggregate everything - maybe you just need enough information in the aggregation to tell you which instance is the suspect.

- 20:20 We have so much memory and CPU lying on every host, and those hosts are rarely running at full capacity.

- 20:30 That can be used for something good if we know how to tap into that without affecting the purpose of the host.

- 20:50 For example, most container hosts don’t run out of disk space.

- 21:05 This was something I relied on when working with caching systems.

- 21:15 We only aggregated data on a minute level of resolution, but would often see small spikes of requests.

- 21:30 We had high-performance logging that dumped the data to disk in an asynchronous way so that we didn’t interfere with the host process.

- 21:50 Because people tend to ask about performance problems just after they have happened, what if we cache only a short period of data?

- 22:05 We don’t even need to cache a full day - people tend to come to the host within an hour of the incident occurring.

- 22:10 If we store information on the local disk and keep it there for a few hours to a few days, we can build that and apply analysis on these local data stores to get the answer.

- 22:30 The data doesn’t need to leave the box because performance issues are often edge cases and isolated in a few places in your operation - they aren’t everywhere.

- 22:40 We translate what is a large-scale monitoring problem into a local problem.
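
The approach described above can be sketched as follows. This is a toy model rather than Twitter's implementation: the `LocalRecorder` class is hypothetical, it keeps data in memory where the podcast describes asynchronous logging to local disk, and the traffic numbers are invented. Per-second counts stay on the host in a bounded buffer, and only per-minute totals would be shipped to the central system.

```python
from collections import deque

class LocalRecorder:
    """Keep per-second counts locally; export only per-minute totals."""

    def __init__(self, retention_seconds=3600):
        # Bounded buffer: the oldest seconds fall off, so storage stays fixed.
        self.per_second = deque(maxlen=retention_seconds)

    def record_second(self, timestamp, request_count):
        self.per_second.append((timestamp, request_count))

    def minute_aggregates(self):
        """Coarse view that would be sent to the central monitoring system."""
        totals = {}
        for ts, count in self.per_second:
            minute = ts // 60
            totals[minute] = totals.get(minute, 0) + count
        return totals

    def peak_second(self, minute):
        """Fine-grained view answered on the box: the busiest second of a minute."""
        in_minute = [(count, ts) for ts, count in self.per_second if ts // 60 == minute]
        count, ts = max(in_minute)
        return ts, count

recorder = LocalRecorder()
# Minute 0: a steady 100 requests per second.
for second in range(60):
    recorder.record_second(second, 100)
# Minute 1: 90 requests per second, plus a one-second spike of 690 requests.
for second in range(60, 120):
    recorder.record_second(second, 690 if second == 75 else 90)

aggregates = recorder.minute_aggregates()
print(aggregates)               # both minutes total 6000 requests
print(recorder.peak_second(0))  # busiest second of the steady minute
print(recorder.peak_second(1))  # the spike, visible only in the local data
```

Both minutes report the same total, so the central view cannot tell them apart, while the local per-second data shows the spike immediately.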

Do you log into just a few servers then?

- 23:10 That one instance may be what is causing enough failures to cause the problems for your services.

- 23:25 We look up the aggregated data, and find out which instance is seeing more traffic on a minute-by-minute basis, and whether it’s spread out over a minute or all in one second.

- 23:45 That’s information that can be passed on to the customers to see if there’s a particular issue - for example, if people are all reading Taylor Swift’s tweet, the customers can add additional caching to solve the problem.
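
One simple way to pick the suspect out of the aggregated data is to compare each instance against the fleet median. The sketch below is an assumption about how that could be done, not a description of Twitter's tooling; the instance names, counts and the `factor` threshold are invented.

```python
from statistics import median

def suspects(requests_per_minute, factor=3.0):
    """Return instances whose per-minute traffic exceeds `factor` times the fleet median."""
    typical = median(requests_per_minute.values())
    return sorted(
        name for name, count in requests_per_minute.items()
        if count > factor * typical
    )

# Hypothetical per-minute request counts taken from the central aggregation.
fleet = {
    "cache-01": 6000,
    "cache-02": 6100,
    "cache-03": 5900,
    "cache-04": 42000,  # the host serving the hot key
}

hot = suspects(fleet)
print(hot)
```

The median is used instead of the mean so that the hot instance itself does not drag the baseline upwards. Once a suspect is known, the finer-grained data held on that host can be examined.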

What are some of the interesting problems you’ve seen at scale?

- 24:25 One of my favourites was the DDoS incident that we had. Ellen DeGeneres took a selfie picture at the Oscars and encouraged her followers to retweet it in order to break a record on Twitter - and it actually broke Twitter.

- 24:55 They generated enough traffic to the one particular cache server that hosted that tweet that some of the services started timing out.

- 25:10 When you have a lot of requests hitting that one server, the requests started timing out and then retrying.

- 25:25 When you retry you tear down the connection and create a new one, because you don’t know what the state of that connection is.

- 25:30 The clients decided that they wanted to close their connections and then open them again, which was quite expensive.

- 25:40 When you have thousands of clients trying to open connections to a single server at the same moment, the server falls over.

- 25:50 It’s not that we couldn’t serve the requests fast enough - we didn’t even see the requests.

- 26:05 Before we could accept them, they got timed out. So we had to restart some machines.

What was the retrospective on that one?

- 26:20 There were a number of things; we changed the behaviour on both the client side and server side.

- 26:25 If we cannot accept these connections fast enough, we shouldn’t even try.

- 26:30 It’s much better to tell the clients to go away, and handle the subset of connections that we can process.

- 26:40 That resulted in a number of iptables rules that rate-limit the number of connections that reach the service.

- 26:50 Everything else we just reject straight away.

- 27:00 On the client side we made major changes - we realised that closing a lot of connections every time we saw a timeout was a bad idea.

- 27:05 A better strategy is to treat request timeout differently from other connection health checks.

- 27:20 We introduced a pipelining query - instead of opening multiple connections when we have multiple requests, we funnel them through the same pipe (which means we don’t have to create multiple connections).
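
The client-side change can be illustrated with a small sketch. Everything here is hypothetical: `CacheClient`, the two exception types and the `FlakyConnection` test double are invented for the example, and it assumes a protocol where a late response to a timed-out request can be safely discarded. A request timeout is retried on the existing connection, and only a genuinely broken connection is torn down and reopened.

```python
class RequestTimeout(Exception):
    """The server did not answer in time; the connection itself may be fine."""

class ConnectionBroken(Exception):
    """The connection is unusable and must be re-established."""

class CacheClient:
    def __init__(self, connect, max_retries=2):
        self._connect = connect      # factory that returns a new connection
        self._max_retries = max_retries
        self._conn = None
        self.connects = 0            # how many connections were opened

    def _connection(self):
        if self._conn is None:
            self._conn = self._connect()
            self.connects += 1
        return self._conn

    def get(self, key):
        last_error = None
        for _ in range(self._max_retries + 1):
            try:
                return self._connection().get(key)
            except RequestTimeout as err:
                # Slow server: keep the connection and retry on it.
                last_error = err
            except ConnectionBroken as err:
                # Only a broken connection is dropped and reopened.
                last_error = err
                self._conn = None
        raise last_error

class FlakyConnection:
    """Test double: times out on the first `timeouts` calls, then answers."""
    def __init__(self, timeouts):
        self.remaining = timeouts

    def get(self, key):
        if self.remaining > 0:
            self.remaining -= 1
            raise RequestTimeout(key)
        return f"value-for-{key}"

client = CacheClient(connect=lambda: FlakyConnection(timeouts=2))
result = client.get("tweet:123")
print(result, "connections opened:", client.connects)
```

Two timeouts are absorbed on a single connection, so the overloaded server never sees a wave of reconnects. A production client would also add backoff between retries, which is left out here to keep the sketch short.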

What is the biggest problem today facing software developers?

- 29:20 I hope that one day there is a community that can surface some of the common performance problems and their solutions.

Mentioned

Twitter content on InfoQ

The Tail at Scale article in Communications of the ACM.