Microsoft has released a preview of a series of updates to its Azure Monitor OpenTelemetry Exporter packages for .NET, Node.js, and Python applications. The new features include exporting OpenTelemetry metrics to Azure Monitor Application Insights (AMAI), finer-grained control over sampling of traces and spans, and local caching with delivery retries of telemetry data during temporary disconnections from AMAI.

Azure Monitor is a suite of tools for gathering, analyzing, and responding to infrastructure and application telemetry data from cloud and on-premises environments. AMAI is one of the tools within Azure Monitor and provides application performance monitoring (APM) to its users. AMAI also supports distributed tracing, one of the pillars of the observability paradigm, across multiple applications.

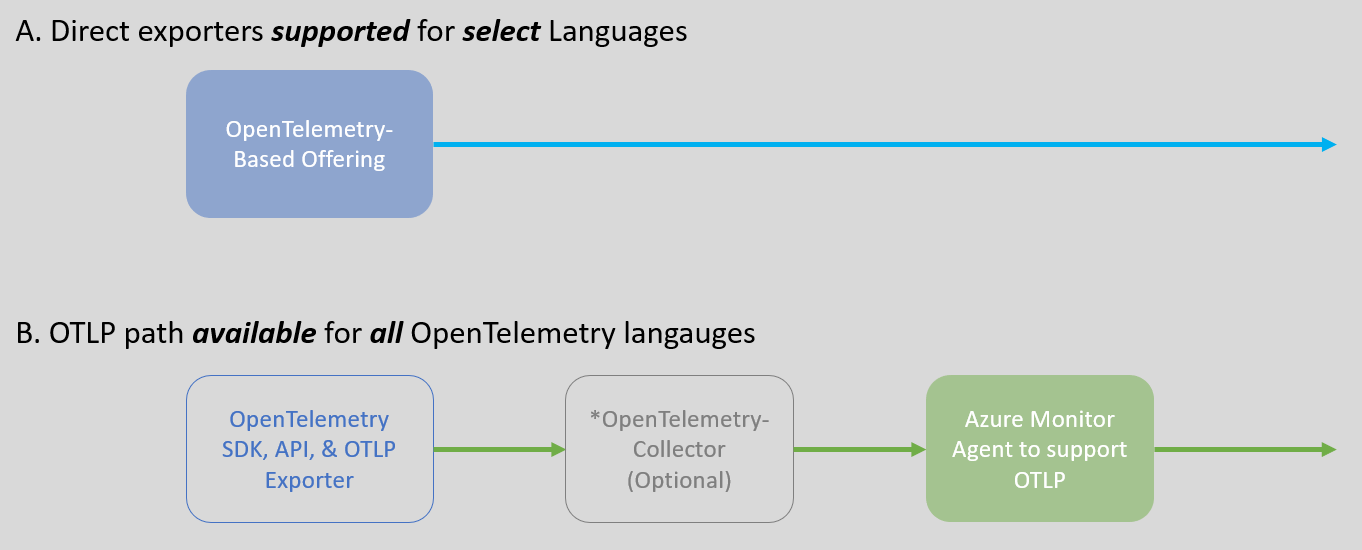

OpenTelemetry is a framework that provides vendor-agnostic APIs, SDKs, and tools for collecting, transforming, and exporting telemetry data to observability back-ends. In a 2021 blog post, Microsoft outlined its roadmap for integrating OpenTelemetry with the wider Azure Monitor ecosystem. The immediate focus was on building exporters that send data directly from OpenTelemetry-based applications to AMAI, as opposed to the de facto OpenTelemetry route of an OTLP exporter sending data to Azure Monitor via the OpenTelemetry Collector.
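
To make the idea of a direct exporter concrete: in the OpenTelemetry JavaScript SDK, an exporter is a plug-in that the span processor hands finished spans to. The sketch below assumes the `export(items, resultCallback)` / `shutdown()` shape of the SDK's `SpanExporter` interface and mirrors the numeric result codes of `ExportResultCode` from `@opentelemetry/core`. `InMemoryBackendExporter` and its `sendBatch` transport are hypothetical, and plain objects with `name` and `durationMs` stand in for real spans. A direct exporter such as the Azure one converts spans into the back-end's own format at this point, whereas an OTLP exporter encodes them as OTLP for a Collector.

```javascript
// Numeric result codes, mirroring ExportResultCode in @opentelemetry/core.
const ExportResultCode = Object.freeze({ SUCCESS: 0, FAILED: 1 });

// Hypothetical exporter that delivers spans through a caller-supplied transport.
// It follows the SpanExporter shape: export(items, resultCallback) and shutdown().
class InMemoryBackendExporter {
  constructor(sendBatch) {
    this._sendBatch = sendBatch; // hypothetical transport: returns true on success
    this._isShutdown = false;
  }

  export(spans, resultCallback) {
    if (this._isShutdown) {
      resultCallback({ code: ExportResultCode.FAILED, error: new Error("exporter is shut down") });
      return;
    }
    // A direct exporter converts spans to the back-end's own schema before sending;
    // an OTLP exporter would instead encode them as OTLP for a Collector.
    const payload = spans.map((span) => ({ name: span.name, durationMs: span.durationMs }));
    const delivered = this._sendBatch(payload);
    resultCallback({ code: delivered ? ExportResultCode.SUCCESS : ExportResultCode.FAILED });
  }

  shutdown() {
    this._isShutdown = true;
    return Promise.resolve();
  }
}

// Usage with plain objects standing in for finished spans.
const backend = [];
const exporter = new InMemoryBackendExporter((batch) => {
  backend.push(...batch);
  return true;
});
exporter.export([{ name: "GET /orders", durationMs: 12 }], (result) => console.log(result.code)); // 0
console.log(backend.length); // 1
```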

A sample of the direct exporter in a Node.js application with OpenTelemetry tracing in place looks like this:

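```javascript
const { AzureMonitorTraceExporter } = require("@azure/monitor-opentelemetry-exporter");
const { NodeTracerProvider } = require("@opentelemetry/sdk-trace-node");
const { BatchSpanProcessor } = require("@opentelemetry/sdk-trace-base");
const { Resource } = require("@opentelemetry/resources");
const { SemanticResourceAttributes } = require("@opentelemetry/semantic-conventions");

// Identify the service in Application Insights
const provider = new NodeTracerProvider({
  resource: new Resource({
    [SemanticResourceAttributes.SERVICE_NAME]: "basic-service",
  }),
});

// Create an exporter instance
const exporter = new AzureMonitorTraceExporter({
  connectionString:
    process.env["APPLICATIONINSIGHTS_CONNECTION_STRING"] || "<your connection string>",
});

// Add the exporter to the provider: spans are batched and exported
// every 15 seconds, with at most 1000 spans per export
provider.addSpanProcessor(
  new BatchSpanProcessor(exporter, {
    scheduledDelayMillis: 15000,
    maxExportBatchSize: 1000,
  })
);

// Register the provider globally once it is fully configured
provider.register();
```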
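
The `connectionString` passed to the exporter is copied from the Application Insights resource and is a semicolon-separated list of `key=value` pairs, typically including `InstrumentationKey` and `IngestionEndpoint`. The sketch below shows how such a string breaks down. `parseConnectionString` is a hypothetical helper for illustration, not an export of `@azure/monitor-opentelemetry-exporter` (which parses the string internally), and the key and endpoint values are placeholders.

```javascript
// Hypothetical helper: split an Application Insights connection string
// ("Key1=value1;Key2=value2;...") into a plain object.
function parseConnectionString(connectionString) {
  const result = {};
  for (const part of connectionString.split(";")) {
    const trimmed = part.trim();
    if (trimmed === "") continue; // tolerate trailing or doubled semicolons
    const eq = trimmed.indexOf("=");
    if (eq === -1) continue; // skip fragments without a key/value separator
    // Split on the first "=" only, so values may themselves contain "="
    result[trimmed.slice(0, eq).trim()] = trimmed.slice(eq + 1).trim();
  }
  return result;
}

// Placeholder values only; copy the real string from the Azure portal.
const parsed = parseConnectionString(
  "InstrumentationKey=00000000-0000-0000-0000-000000000000;" +
    "IngestionEndpoint=https://example.in.applicationinsights.azure.com/"
);
console.log(parsed.InstrumentationKey); // 00000000-0000-0000-0000-000000000000
console.log(parsed.IngestionEndpoint); // https://example.in.applicationinsights.azure.com/
```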

With these updates to the Azure Monitor OpenTelemetry Exporter packages, metrics can now be exported to AMAI as well, as shown below:

```javascript
const { MeterProvider, PeriodicExportingMetricReader } = require("@opentelemetry/sdk-metrics");
const { AzureMonitorMetricExporter } = require("@azure/monitor-opentelemetry-exporter");

// Add the exporter into the MetricReader and register it with the MeterProvider
const provider = new MeterProvider();

const exporter = new AzureMonitorMetricExporter({
  connectionString:
    process.env["APPLICATIONINSIGHTS_CONNECTION_STRING"] || "<your connection string>",
});

const metricReaderOptions = {
  exporter: exporter,
};

const metricReader = new PeriodicExportingMetricReader(metricReaderOptions);

provider.addMetricReader(metricReader);
```
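
`PeriodicExportingMetricReader` collects the aggregated metrics on a fixed interval (its `exportIntervalMillis` option, 60 seconds by default in the OpenTelemetry JS SDK) and hands each batch to the exporter, so measurements are aggregated in-process and pushed to AMAI in batches rather than per call. The toy model below is a simplified, dependency-free sketch of that collect-then-export cycle. `ToyCounter`, `ToyPeriodicReader`, and `collectAndExport()` are hypothetical names, the timer is replaced by a manual call, and the toy reports running totals; it does not reproduce the SDK's aggregation or temporality handling.

```javascript
// Toy counter: keeps a running total per attribute set.
// Attributes are keyed with JSON.stringify, so key order matters in this simplification.
class ToyCounter {
  constructor(name) {
    this.name = name;
    this.totals = new Map(); // serialized attributes -> running sum
  }

  add(value, attributes = {}) {
    if (value < 0) throw new RangeError("counter increments must be non-negative");
    const key = JSON.stringify(attributes);
    this.totals.set(key, (this.totals.get(key) ?? 0) + value);
  }
}

// Toy reader: each collection snapshots every registered counter
// and hands the resulting batch to an export function.
class ToyPeriodicReader {
  constructor(exportFn) {
    this.exportFn = exportFn; // stands in for the exporter
    this.counters = [];
  }

  register(counter) {
    this.counters.push(counter);
    return counter;
  }

  // In the real SDK a timer triggers collection every exportIntervalMillis;
  // here it is called manually.
  collectAndExport() {
    const batch = this.counters.flatMap((counter) =>
      [...counter.totals].map(([attrs, sum]) => ({
        name: counter.name,
        attributes: JSON.parse(attrs),
        sum,
      }))
    );
    this.exportFn(batch);
    return batch;
  }
}

// Usage: three recordings become two data points in a single export.
const exported = [];
const reader = new ToyPeriodicReader((batch) => exported.push(batch));
const requests = reader.register(new ToyCounter("http.requests"));
requests.add(1, { route: "/orders" });
requests.add(1, { route: "/orders" });
requests.add(1, { route: "/health" });
reader.collectAndExport();
console.log(exported[0].map((p) => `${p.attributes.route}=${p.sum}`).join(", ")); // /orders=2, /health=1
```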

To manage the amount of telemetry data sent to Application Insights, the packages now include a sampler that controls the percentage of traces that are sent. Applied to the Node.js trace example from earlier, it looks like this:

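```javascript
const { ApplicationInsightsSampler, AzureMonitorTraceExporter } = require("@azure/monitor-opentelemetry-exporter");
const { NodeTracerProvider } = require("@opentelemetry/sdk-trace-node");
const { Resource } = require("@opentelemetry/resources");
const { SemanticResourceAttributes } = require("@opentelemetry/semantic-conventions");

// Sampler expects a sample rate of between 0 and 1 inclusive
// A rate of 0.75 means approximately 75% of traces are sent
const aiSampler = new ApplicationInsightsSampler(0.75);

const provider = new NodeTracerProvider({
  resource: new Resource({
    [SemanticResourceAttributes.SERVICE_NAME]: "basic-service",
  }),
  sampler: aiSampler,
});

// The AzureMonitorTraceExporter and span processor are then added as in the earlier example
```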
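
Ratio-based samplers typically derive their decision from the trace ID, so every span that shares a trace ID gets the same keep-or-drop decision and a trace is not left half-sampled. The sketch below illustrates that idea with hypothetical `traceIdScore` and `shouldSample` helpers: the 128-bit trace ID is XOR-folded into a 32-bit number, scaled to [0, 1), and the trace is kept when the score is below the ratio. This scoring function is an assumption chosen for illustration; `ApplicationInsightsSampler` uses its own scoring, so its decision for a given trace ID can differ.

```javascript
const { randomBytes } = require("crypto");

// Hypothetical helper: fold a 128-bit trace ID (32 hex characters) into a score in [0, 1).
// XOR-ing the four 32-bit chunks keeps the result uniform when the trace ID is random.
function traceIdScore(traceId) {
  if (!/^[0-9a-f]{32}$/i.test(traceId)) {
    throw new TypeError("traceId must be 32 hexadecimal characters");
  }
  let folded = 0;
  for (let i = 0; i < 32; i += 8) {
    folded = (folded ^ parseInt(traceId.slice(i, i + 8), 16)) >>> 0;
  }
  return folded / 2 ** 32;
}

// Hypothetical helper: keep the trace when its score falls below the ratio.
// Every span of a trace shares the trace ID, so they all get the same decision.
function shouldSample(traceId, ratio) {
  if (!(ratio >= 0 && ratio <= 1)) {
    throw new RangeError("ratio must be between 0 and 1 inclusive");
  }
  return traceIdScore(traceId) < ratio;
}

// Usage: over many random trace IDs, the kept fraction approaches the ratio.
let kept = 0;
const total = 10000;
for (let i = 0; i < total; i++) {
  if (shouldSample(randomBytes(16).toString("hex"), 0.75)) kept++;
}
console.log((kept / total).toFixed(2)); // close to 0.75 (varies from run to run)
```

Because the decision depends only on the trace ID and the ratio, any two components using the same scoring function and ratio agree on which traces to keep without coordinating.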

Finally, when the connection to AMAI fails, the direct exporters write their payloads to local storage and periodically retry delivery over a 48-hour period. The storage location, and whether offline storage is used at all, can be configured when the exporter is instantiated, as shown below:

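```javascript
const { AzureMonitorTraceExporter } = require("@azure/monitor-opentelemetry-exporter");

const exporter = new AzureMonitorTraceExporter({
  connectionString: process.env["APPLICATIONINSIGHTS_CONNECTION_STRING"],
  storageDirectory: "C:\\SomeDirectory", // your desired location for cached telemetry
  disableOfflineStorage: false, // offline storage is enabled by default; set to true to disable it
});
```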
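
The store-and-retry behaviour can be pictured with the simplified sketch below. It assumes one JSON file per failed batch with the write time encoded in the file name, a hypothetical `send(payload)` function that returns `true` on success, and a 48-hour retention window matching the period described above. `persistBatch`, `retryPersisted`, and the file layout are illustrative assumptions; the exporter's actual on-disk format, retry interval, and cleanup logic are internal to the package.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

const RETENTION_MS = 48 * 60 * 60 * 1000; // drop batches older than 48 hours

// Hypothetical: write a failed batch to its own timestamped JSON file.
function persistBatch(dir, payload, now = Date.now()) {
  fs.mkdirSync(dir, { recursive: true });
  const file = path.join(dir, `${now}-${Math.random().toString(36).slice(2)}.json`);
  fs.writeFileSync(file, JSON.stringify(payload));
  return file;
}

// Hypothetical: one retry pass. Delivered or expired files are deleted;
// files whose delivery fails again stay on disk for the next pass.
function retryPersisted(dir, send, now = Date.now()) {
  const stats = { delivered: 0, expired: 0, pending: 0 };
  if (!fs.existsSync(dir)) return stats;
  for (const name of fs.readdirSync(dir).filter((n) => n.endsWith(".json"))) {
    const file = path.join(dir, name);
    const writtenAt = Number(name.split("-")[0]);
    if (now - writtenAt > RETENTION_MS) {
      fs.unlinkSync(file);
      stats.expired++;
    } else if (send(JSON.parse(fs.readFileSync(file, "utf8")))) {
      fs.unlinkSync(file);
      stats.delivered++;
    } else {
      stats.pending++;
    }
  }
  return stats;
}

// Usage: a batch persisted while "offline" is delivered once the connection returns.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), "telemetry-"));
persistBatch(dir, [{ name: "GET /orders" }]);
console.log(retryPersisted(dir, () => false)); // { delivered: 0, expired: 0, pending: 1 }
console.log(retryPersisted(dir, () => true)); // { delivered: 1, expired: 0, pending: 0 }
```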