In this episode of the InfoQ podcast Charles Humble talks to Michael Perry about his book "The Art of Immutable Architecture". They discuss topics including the eight fallacies of distributed computing: a set of assertions made by L Peter Deutsch and others at Sun Microsystems describing false assumptions that programmers new to distributed applications invariably make. Other topics include Pat Helland’s paper "Immutability Changes Everything", Eric Brewer's CAP Theorem, eventual consistency, location independent identity, and CRDTs. They also discuss how the approach to building distributed systems advocated by Perry could be introduced to a real-world enterprise app that needs to integrate with mutable downstream systems.

Key Takeaways

- Building systems in an immutable way means you can decouple the idea of thinking about the topology of your system from thinking about its behavior and its data.

- Central to making this approach work is the idea of location independent identity - identities that don't care about where the data is stored.

- Eric Brewer's CAP Theorem states that for the three properties that you want in a distributed system - consistency, availability, and partition tolerance - it is only ever possible to simultaneously achieve two of them. We typically relax one constraint, for example consistency, in real-world distributed systems.

- CRDTs, such as treedoc used for Google Docs, are a data type that allows us to relax consistency via a consistency guarantee that's better than eventual consistency, called strong eventual consistency.

- One of the side effects of not following the principles of immutability is that the system might not be idempotent. The outbox pattern is one way we can minimize the probability that this happens.

Subscribe on:

Transcript

Introductions [00:21]

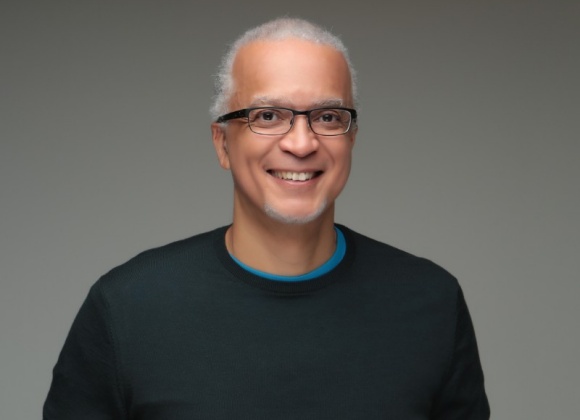

Charles Humble: Hello and welcome to the InfoQ Podcast. I'm Charles Humble, one of the co-hosts of the show, and editor in chief at cloud native consultancy firm Container Solutions. My guest today is Michael Perry. Michael is principal consultant at Improving Enterprises. He's recorded a number of Pluralsight courses, including cryptography fundamentals and patterns for building distributed systems for the enterprise. He's also recently published a book with Apress called "The Art of Immutable Architecture", which I found a fascinating and I also thought very timely book, thinking about how we go about building distributed systems and how we could maybe do that better. And it's that we're going to talk about today. Michael, welcome to the InfoQ Podcast.

Michael Perry: Thank you, Charles. Pleasure to be here.

How much were you influenced by Pat Helland’s "Immutability Changes Everything” paper and the Eight Fallacies of Distributed Computing? [01:01]

Charles Humble: There are a couple of things you referenced early on in the book which I thought we should also mention to give listeners some context. The first of those is Pat Helland's paper, which is "Immutability Changes Everything", which was published by ACM in 2016. And then the second is the Eight Fallacies of Distributed Computing, which came out of Sun Microsystems. And I'll be sure to link to both of those in the show notes. I was interested as to how much those two pieces of writing were influential in terms of your thinking as you were developing the ideas in the book.

Michael Perry: Yes, I would say that the fallacies have influenced my thinking for most of my career. I've made those mistakes a number of times. So when I started working on the book, I really wanted to help people to recognize when they're making those mistakes in their own work, when they're making an assumption that falls right into one of those fallacies. And then as I was taking a look at Pat Helland's paper on immutability, Immutability Changes Everything, he really went through a series of places where we have used immutability in order to solve some real-world problems, from how hard drives are organized all the way up through how computer networks work. And I found that immutability really was a key factor in addressing those fallacies within all of those various systems.

Why do we default to mutable systems? [02:13]

Charles Humble: Speaking for myself, I know that I kind of naturally default to mutability when I'm thinking about systems, and that may be because a lot of my programming background is in object-oriented languages, languages like Java and C#. But I suspect that that's a very common thing. I think that's what we typically do as programmers unless we've worked mainly in functional programming. And I was curious as to why you think that is? Why do we default to mutable systems?

Michael Perry: There's really two reasons. One, that's kind of the way that the world works. We see things that are changing all the time. And a lot of what we're doing in software is solving a problem by modeling the real world. So if we model it with mutability, that just seems to be the natural choice. The other side of that is the way the computers work. Computers have memory that you can change the value in, they have hard drives we can overwrite one file with contents from another file. So they work in that mutable style. So applying those mutable tools to mutable problems just seems like the right choice. Why would you take this detour through immutability in order to come back and model a mutable world? It's counterintuitive.

What is immutable architecture? [03:18]

Charles Humble: Immutable architecture is a very interesting title. We've said already that most programmers come across the idea of immutability through functional programming predominantly. So given that, how do you then take those ideas and apply them to architecture? What is immutable architecture?

Michael Perry: Starting within the context of functional programming, we use immutability to reason about how a program behaves, and that helps us with things like concurrency so that we can tell that this is the value. There's nothing else that's changing it that I can't see that's within the scope of the function that I'm looking at. Now, when we take that outside of a single process or some memory, and now apply that to how distributed systems behave, now we're talking about persistent storage, we're talking about messages on the wire, and if we don't have the tools that we need in order to reason about the behavior of that distributed system, then it's going to be really difficult to achieve things that we really want to be able to achieve.

Things like, if I were to ask two different nodes within a cluster the same question, am I going to get the same answer? That's a guarantee that we call consistency and it's really important. So we want to be able to reason about when am I going to be able to achieve consistency and what does it really mean to be consistent? When it comes to modeling problems, often it's just as important to know how a system got to be in a particular state, as it is to know what that particular state is. So keeping track of that history is sometimes really important. And history by its very nature is immutable. Once a thing happens, it has happened. You can write down that historical fact and it will never change. So I think bringing immutability back into our tool set in order to solve distributed problems is really handy and really helpful.

Charles Humble: We should maybe clear up some misconceptions that listeners might have, and one that sprung to mind for me was the difference between immutable architecture and immutable infrastructure, because immutable infrastructure's obviously a term that's been used quite extensively in the last few years. Can you give us a definition of what that is and how that compares?

Michael Perry: Immutable infrastructure is the practice of describing the virtual machines, typically, and all of the services, the networks that they are connected to, in a way that you say this is the state, and I'm not going to go in and update the state of that virtual machine or update the state of that network configuration. Instead, if I want to deploy a new state, I'm going to deploy it in parallel, transfer all the traffic and then tear down the original one. So it's this typical idea of treating cloud resources as commodities, rather than as something that you name and you care for.

Charles Humble: And the other thing we should perhaps touch on, because it might be a reasonable assumption to make if you haven't read the book and you aren't familiar with the ideas, is that when we talk about immutable architecture, we're not talking about architecture that can't change, because that would self-evidently be a terrible idea.

Michael Perry: Yes, that would be very bad. Instead of we're talking about taking the idea of immutability and making that central to your software architecture, and then how you deploy it, you could deploy it on an immutable infrastructure if you choose to. That is a completely separate and independent problem.

Can you give us a quick refresher on CAP theorem? [06:24]

Charles Humble: Now Eric Brewer's CAP theorem underpins a lot of the thinking in the book and a lot of what we're going to be talking about for the rest of this interview. So again, could you maybe give listeners a bit of a refresher on that if they've maybe forgotten some of the details since they last looked at it?

Michael Perry: So this is Eric Brewer's conjecture that given consistency, availability, and partition tolerance, which are three properties that you want in a distributed system, it's only possible to simultaneously achieve two of them. So consistency is what I just described. If you ask two different nodes the same question, you get the same answer. Availability is that you will get an answer within a reasonable period of time. And partition tolerance is that the system will continue to uphold those problems even if the nodes can't talk to each other, there's a partition within the network. So CAP theorem says you can only choose two.

Can you take us through the two generals' problem? [07:12]

Charles Humble: You mentioned the consistency problem a couple of times, and in the book you use the two generals problem as an illustration of that, which I really enjoyed. Can you maybe give a brief summary of that as well for listeners who aren't familiar with it?

Michael Perry: This is an illustration of consistency that goes back a ways. I can't claim authorship for it myself, but I found it to be really fascinating. The idea, it goes that you've got a couple of generals that are leading armies outside of a besieged city, and they have to decide when they're actually going to attack. So they've been cutting off the supply lines and they are sending scouts to figure out, is the city weak enough now to attack? If only one of the armies attacks, then the attack will fail. They must both attack at the same time. So they need to agree that they're either going to attack tomorrow or they are not. So your job is to come up with a protocol by which you can guarantee that if one of the armies agrees that it's time to attack tomorrow, that they know they have a guarantee that the other army has also agreed.

So you could think about this as, "Okay, I'm going to send a scout or I'm going to send a messenger over to the other general, and then that messenger is going to say, 'We're going to attack tomorrow,' and then I've got a guarantee." Well, no you don't. You don't know that that messenger has actually made it to the other camp. They might've been captured. So maybe add to your protocol a response. So I send a messenger that says we're going to attack tomorrow, and I expect a response, and then I don't attack until I receive the response. Well now can the other army know that they're going to attack because they're just going to be sending a response message and they have no idea whether it made it through. So you continue this reasoning and you eventually end up at a place where you realize there is no protocol that guarantees that you're going to reach consistency at a particular point in time.

Charles Humble: And really the only way you can get round that, as I understand it, is by basically relaxing one constraint or another.

Michael Perry: Yes, exactly. So if you relax the constraint that, "Okay, we're going to attack tomorrow." Now there's no deadline. You can continue the protocol as long as you need to. And then you'll also relax the constraints that you won't attack unless you have a guarantee from the other side, because that's the crux of the problem. You can't get that guarantee. So instead, we'll say we'll continue sending messages until we both know that this decision has been made. So under those relaxed constraints, you can find the solution, and the solution is actually pretty simple. And those relaxed constraints actually map to the concept of eventual consistency. So this gives us a way that we can illustrate the trade-off between strong consistency, as required by the CAP theorem, and eventual consistency with some relaxed constraints.

Eventual Consistency in the real-world [09:53]

Charles Humble: So trying to make this a little bit more concrete, perhaps, very early on in my career, so in the late 1990s I think, I was working on a system which was basically an early internet banking app. So it was putting a web front-end to some things that were green screen mainframes in the background. And one of the things that I worked on was a screen that would display a balance to a customer, which sounds like it would be a really trivial problem, and it turned out to be almost impossible because you had pending transactions, the various mainframes would sync up maybe once a day for a batch job, except that sometimes the batch job didn't run. You might have transactions from the cash machine network or from credit card machines and those sorts of things which hadn't come in or which we knew were going to come in, but they were still being held somewhere and so on.

I don't think I knew the term eventual consistency at the time, but that's basically what we were dealing with. So a lot of that project was spent having conversations with the business that would be, "What is an acceptable level of accuracy, because we can't be 100%. So how do we get to a point where we can all agree, 'Yes, this is okay.'" And that seems to me to be sort of illustrative of what we're talking about.

Michael Perry: Yes, that's a really good real-world example of exactly what we're talking about. So one might be led to believe that, "Okay, so since the two generals problem says that strong consistency is impossible if you could have a network partition without giving up availability," then you might think, "Well, okay, things like ATMs couldn't possibly work, but yet they do. So how do we solve this problem?" We solve the problem by putting in those relaxed constraints within the business domain. So yes, we'll allow a customer to withdraw some money, even if we can't guarantee that their balance is above $20, for example. We'll still allow that transaction, knowing that we've got some compensation from the problem domain itself.

Charles Humble: The thing there is I might be able to withdraw from one cash machine and then run to another cash machine and make another withdrawal if I'm quick enough and lucky in my timing, and I'll end up overdrawn even if my account doesn't allow it, but if the business has said, "Well that's fine," then that's okay.

Michael Perry: Yes. And if they can turn that into a revenue stream, then all the better.

What is the purpose of location independent identity? [12:03]

Charles Humble: Absolutely, yes. We'll charge you 20 pounds for sending you the latter or whatever it is. So in one chapter in the book you talk about location independent identity. And again, I just found that really interesting. So what is the purpose of that?

Michael Perry: I think this is one of the cornerstones to making this kind of architecture work. Typically if we are using a relational database, we'll have an auto incrementing ID as one of the columns. So we'll use that ID that's generated on insert to be the identity of the record that we're storing. That identity is only good within that particular database cluster. So it's tied to a specific location. You can think of several different scenarios in which the same entity, the same object would have a different identity in a different location. If we were storing an offline backup, we might be just executing the same SQL statements against it, but then generating different IDs. Or we might have two different replicas that are both accepting writes, so they both might allocate the same ID, but two different objects.

So the idea of using an identity that is tied to a location is one of the things that causes us trouble. It gets us into a bad situation. So what I explore in the book is a set of identities that we could choose that are location independent. They don't care about where the data is stored. One great example is a hash. So you take the value that you're trying to store, say it's an image, a photograph, and you will compute a SHA-512 hash. That hash is going to be the same no matter who computes it. So if you use that as the identity of the photograph rather than a file name, for example, then that identity is going to be location independent. So by using location independent identities, you can have different machines within a distributed system talking to each other about objects and knowing that they're talking about the same thing.

Charles Humble: And given that location independence is a property of immutable architecture, how do you then guarantee that two nodes will eventually arrive at the same conclusion?

Michael Perry: That where some really good math papers come into play. So if you have a system that is based on collecting immutable records, you have to still have some guarantees about the records that you collect in order to make sure that everybody's going to interpret them in the same way. So one of those guarantees has to do with the order of those events, the order of those messages. If you must play all of the messages in the same order in order for two nodes to achieve the same outcome, then those messages are not what we call commutative with one another, just like the commutative property in algebra, A plus B equals B plus A. So if we can guarantee that if I receive messages in a different order than you do, we still compute the same outcome, then we can say that our message handler i s being communicative.

So the way that we can now take that and apply that to a distributed system is if we come up with an operation that like plus is a commutative operation and only use that operation in order to compute current state, then now we can build our distributed systems on top of that operation and have that guarantee given to us for free. So the operation that I like to use is set union. So if I receive two different objects and I put them into sets and then I union those sets together, I'll get out a set that has those two objects in it, and somebody else could receive them in a different order, union them, and they'll still get the same set, because sets don't know the order in which things appear in the set. So now if you can build your entire system based on the operation of set union, then you're really getting somewhere. You can have some strong guarantees about eventually reaching the same state.

Could you give us an example or two of where some of the ideas in the book have been used in real-world application? [15:38]

Charles Humble: And then to make this a little bit more concrete, could you give us an example or two of where some of the ideas in the book have been used in real-world applications, maybe applications that you've worked on?

Michael Perry: For example, a system that I worked on a few years back was for law enforcement. So we had ifferent changes to a case that were occurring over time. So you might think, "Okay, change to a case. You're talking about something that's mutating the case." But what we're actually collecting are the changes themselves, and those changes are immutable. So the change said, "Okay, this is the case that we're talking about, so now identify the case and do that in a location independent way so that everybody knows which one you're talking about. And then who made that change? And most importantly, what information did they have at the time they made the change?" So now that has to be approved by an administrator at some point in the future. So since this is law enforcement information and information about active cases, we have to know exactly where the information came from. And we have to know that it was verified.

So while that information is being verified, other law enforcement officers are seeing the case without that new information in there. So they can make their own edits, which produce new immutable facts about changes to the case. So you might end up with two different officers making a change to the same part of the case, and neither one of them has been approved yet. So neither one knew about the other one. So if you were to put that in a directed acyclic graph, now you would have a graph in which those two nodes don't have references to one another. They didn't know that the other one existed. And now you take the set union of that set of notes, and you'll end up with a graph that has two leaves. It has two places where you could say, "This is the terminus of that graph."

So now seeing that shape, the administrator can see, "Oh, okay, there's been a concurrent edit here," and they can choose to approve one, reject the other, or they can choose to merge those two pieces of information themselves, thus producing a third edit fact that now has causal relationship with the prior two. So it points back to those other two, creating a graph that now has one leaf node. So that's a real world example of how we brought the idea of immutability, how we brought the idea of set union into the problem domain, and that was there not to solve any problem with distributed systems, but just in order to solve the actual domain problem.

Charles Humble: And then in the book you also used the examples of Git and Docker and also blockchain as examples of systems that work in similar ways, that exhibit some of these same characteristics of being immutable architecture systems. So could you maybe take one of those and use that as a way of kind of expanding on some of these ideas for us?

Michael Perry: In fact, the example I just talked about with the law enforcement application, that's actually very similar to a problem that we solve in software all the time when we're working in teams, is that we're making changes to source code without knowledge of the changes that other people are making concurrently. They might not have gone through the pull request process, so they're not part of the main branch, so they're not a predecessor of the work that we're currently doing. But we're able to record that in our version control system, because we have commits that have parent commits. So a commit is an immutable record that points back to a previous record in history that says, "Okay, this new fact was created with knowledge of the previous fact and based on that previous fact."

So there's another example of where we're using immutability, where we're using this idea of merging with the directed acyclic graphs in order to solve a real problem. And this is one that we're, as developers, a bit more familiar with. And as a matter of fact, it also solves the distributed systems problem because I can work offline. I can have my machine disconnected from the remote and I can still be very productive, and I know that when I do come back online that I will eventually become consistent with the remote and with the other members of my team.

How do CRDTs fit into the picture? [19:36]

Charles Humble: Moving to think a little bit about datatypes, you talk about CRDTs in the book, which is a topic that my InfoQ colleague Wes Reisz talked to Peter Bourgon about on an episode of the podcast last year. So I'll link to that in the show notes. But just to help tie all of us together, could you talk about CRDTs and how they fit into the story?

Michael Perry: A conflict-free replicated data type, quite a mouthful. But it is a kind of data type, so you might think of linked list, you might think of directed acyclic graph. So this is a set of state with operations data type, and the operations that it performs internally are just things like updates or a merge. So what do those two things mean? Update is an operation that will perform in order to record some new information within the CRDT, and merge is what will perform when we learn about some other system's replica of that CRDT. So if we are working on one node and the user is interacting and then they push a button and they want to execute an action, we're going to perform an update on our replica of the CRDT. And then when we talk to the server or we talk to a peer, that's where we're going to merge with others.

So a CRDT is just a general set of rules for how we can make guarantees about that data structure in order to make sure that it eventually reaches a consistent state across all of the various replicas. And CRDTs are really exciting because they have a consistency guarantee that's even better than eventual consistency. It's called strong eventual consistency. And this is a guarantee that the CRDTs will reach a consistent state after they have all received the same updates, not necessarily when they have all had a chance to talk with one another and achieve consensus, like for example, using the Paxos algorithm. So as soon as all nodes have achieved all the updates, they are in the same state. That's a pretty strong guarantee with relation to eventual consistency, and one that I find quite useful to base all my work on.

How are CRDTs used in Google Docs? [21:31]

Charles Humble: And CRDTs have been used in some very major well-known applications. I guess the most famous is probably Google Docs. So they have a particular CRDT data type, they call it Treedoc, and Treedoc is used to handle the synchronization that happens when multiple users are editing the same document. So could you talk about that and how that compares to your own work in the field?

Michael Perry: Yes, what I would say is things like Treedoc are very specific CRDTs that were designed to solve a specific problem. So Treedoc solves the problem of collaborative editing of a document, and collaborative could be real time, like we see in Google Docs, it could be offline and supporting a merge once the docs come online. And the eventual consistency guarantee that they offer is that eventually everybody will be seeing the same text on their screen. You won't get some that are seeing one paragraph before the other and some that are seeing it in the opposite order. So Treedoc and other CRDTs were developed for their specific problem. My goal was to develop a CRDT that was more general purpose. So the one that I came up with was where the merge operation was a set union, and the state that it talked about was a directed acyclic graph. So if you can perform a set union of two directed acyclic graphs, then you can guarantee that any two nodes that perform those operations will achieve the same graph.

Now there's one other aspect of a CRDT that I didn't mention before. And that is that there is a projection function. There's a way that you can take that interstate and projected out to something that the application can see. So in the case of Treedoc, the projection function gives you a screen full of text, but the internal state is not just that text. There's not enough information in just the text to be able to guarantee that you can merge it. So likewise, in this application of using directed acyclic graphs, the projection function is how do you interrogate a graph in order to figure out the current state of an application? And there might be many graphs that give you the same current state, because information is kind of hidden from the user as they perform that operation. So people can't see the entire history of everything that's happened. They can only see what the final outcome is.

So if you, as an application developer, can give me a set of historical facts that I can organize in a directed acyclic graph, and you give me a projection function that tells me how I can display this directed acyclic graph to your user, then that's all I need, and I can solve the problems of strong eventual consistency, I can synchronize data between nodes, I can work offline, I can store things that are happening offline and then queue them up to be sent to the server later. So you get all of these capabilities out of the box by describing the problem in a different way.

What would you say are the primary benefits of taking this approach to building distributed systems? [24:15]

Charles Humble: Stepping up a level, what would you say are the primary benefits of taking this approach to building distributed systems?

Michael Perry: The primary benefit, I think, is that you can decouple the idea of thinking about the topology of your system from thinking about its behavior and its data. A lot of times when we're building an application, we are conflating all the ideas of how do I store this to a file, how do I design messages, how do I subscribe to queues, and all of these topological ideas, and we're completing those with the business problem. So how do I design a message that represents this particular action within the business? So you end up having to solve the same problems in distributed systems for every single application that you build. So if you can decouple those and you can say, "Okay, at this point, I'm trying to solve a distributed systems problems, but now I'm thinking about topology, and now at that point only thinking about the problem domain," then it's a lot easier for you to reason about the problem domain and know what you can and cannot accomplish, making that a bit more concrete.

And one of the problems that you might have in your problem domain is that you want to reserve a room for a particular time. So only one meeting can happen in that room at that time. So that is a difficult problem to solve within that problem domain. So you might solve that using topology by saying, "Okay, there is one machine that makes that decision, and if I get another request to reserve that room, that machine will have locked to that record and rejected that second request." So the idea of having a topological bottleneck, of having locks, that is one part of your problem. And then the desire to reserve rooms is another part of your problem.

So what you can do by taking these concepts of immutable architecture and saying what guarantees do they allow me to make, and what guarantees do they not allow me to make? You can reason through that and realize that there is no general solution to the reserve a room and guarantee that only one person has reserved that room for that particular time. So that's the point where you can say, "Okay, at this particular point, I need to bring in a topological solution just to solve that one problem." And then everything else that doesn't rely upon those types of strong constraints, and everything else that doesn't rely upon those strong constraints can be solved using more general methods.

How might you start to introduce an immutable approach to an enterprise application? [26:39]

Charles Humble: Now if I decide to adopt this approach in typical large enterprise application, the challenge that I foresee is that I am going to be talking to downstream systems maybe that I don't have control over, which are not going to be following the kind of immutable approach that you advocate. So I was curious as to how I might manage that. You mentioned, for instance, the outbox pattern in the book, and obviously that's one way that we could introduce these concepts into our typical enterprise setting. So maybe you could talk about that more generally, just how would you advise listeners adopt these practices if they feel they're appropriate for their particular problem?

Michael Perry: That always is the most difficult problem. So one of the solutions, the outbox pattern, which you just mentioned is a way that I can take things that I learn about new immutable facts within my immutable architecture, and I can talk to an external system that is not following the principles of immutability. So one of the side effects of not following the principles of immutability is that this external system might not be idempotent. That's a fancy math word that just means that if I were to send it the same message twice, it might duplicate the effect. So if I'm using an HTTP POST, and then it's giving me back the URL of the thing it just posted, if I didn't receive the first one, I might try to POST again, it might insert a new record, generate a new ID, and then give me back a new URL, so it's just duplicated the effort.

So the outbox pattern is one way that we can not guarantee that we solve the idempotent problem, but instead just minimize the probability that it happens. So this is an idea of, first of all, introducing a topological bottleneck within our system. So that's just a way of saying we're only going to have one machine talking to that API. We're not going to try to talk to the API concurrently from different machines. And then secondly, that one machine is going to keep a journal of everything that is sent to that third-party. So now that journal can link the API requests back to the facts within the immutable system, whereas before it might be that I have a need to call this API, let me call the API, check back again, I still have the need to call the API. Let me call the API. I might not know if that first call is still waiting. So this journal at least helps us to keep track of that.

It doesn't let us keep track of the fact of whether the message made it to the other side or not, so we still have that possibility of they heard the first one, but then we timed out and then they got a duplicate message. It at least minimizes the window a bit. So that's one of the patterns that are talked about in the book about how you can integrate with third-party systems that are not yet following immutability practices, but hopefully, after a lot of people have read the book, then that will no longer be a problem and everything will be immutable and it'll be a wonderful world.

Charles Humble: Let's hope so. So if listeners want to find out more, where would you suggest that they go next?

Michael Perry: The place to start is immutablearchitecture.com. That's a site where you can find links to purchase the book either directly from Apress or from Amazon, and you can also find ways to contact me about learning about immutable architecture and ways that you can join a book study with me.

Charles Humble: Michael Perry, thank you very much indeed for taking the time to join me this week on the InfoQ Podcast.