Wesley Reisz talks to Oren Eini about the history of RavenDB. RavenDB is a fully transactional NoSQL document database that implements both CP and AP guarantees at different times. During the conversation, the two discuss those CP/AP distributed systems challenges, the choice of implementation language (C#), and the current plans for RavenDB 6.0, which include a server-side sharding implementation.

Key Takeaways

- RavenDB has the notion of cluster-wide and node-local transactions. The cluster layer is considered CP (it's always consistent but may not be available in the presence of a partition), while the database layer (node-local transactions) is considered AP (always available, even in the presence of a partition, but those transactions are only eventually consistent).

- C# is the implementation language for RavenDB because of the community around .NET and the language's ability to let developers write both low-level and high-level code. C# offers lower-level systems programming to control memory, CPU, and I/O, while still offering a managed runtime when working at higher levels of abstraction.

- RavenDB previously removed its client-side implementation of sharding. RavenDB 6.0 is working on a new implementation of sharding focused on the server side.

Transcript

Introduction and welcome [00:05]

Wes Reisz: CAP Theorem, also called Brewer's Theorem, is often cited when discussing availability guarantees for distributed systems (a system of computers interconnected by a network). It basically says that if you have, say, a database that runs on two or more computers and the network between them is partitioned, you can have linearizability (a specific consistency requirement which, I'll just say, constrains what outputs are possible when an object is accessed by multiple processes concurrently) or availability, which means you can always get a response.

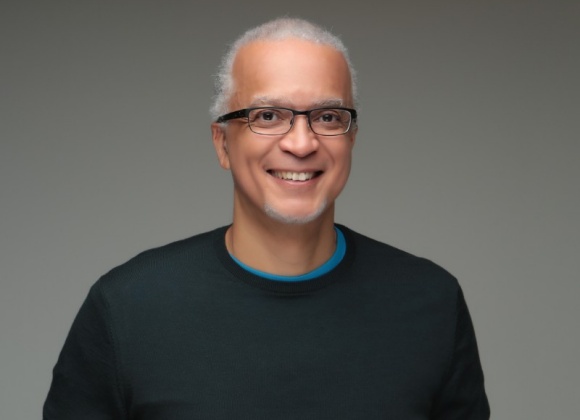

You may have heard CAP Theorem described as: consistency, availability, or partition tolerance, pick two. And by the way, you have to pick partition tolerance. Today on the podcast we're speaking with Oren Eini, CEO of Hibernating Rhinos and creator of RavenDB. RavenDB is an open-source NoSQL document database that is fully transactional. RavenDB includes a distributed database cluster, automatic indexing, an easy-to-use GUI, top-of-the-line security, and in-memory performance for a persistent database.

Today, we're going to be discussing the backstory of RavenDB. We're going to discuss how Raven thinks about the CAP Theorem (spoiler alert: it thinks about it on two different levels), a bit about the choice of implementation language, C#, and finally index management with Raven. As always, thank you for joining me on your jogs, walks and commutes. Oren, welcome to the podcast.

Oren Eini: Thank you for having me.

Where does the name Hibernating Rhinos come from? [01:22]

Wes Reisz: So the very first question I have is right behind you on the screen. The people that are listening can't see your screen, but it says Hibernating Rhinos, the name of the company behind RavenDB. Where does that come from?

Oren Eini: It's a joke. It's an old joke. Around 2004 (18 years ago), I got started on the NHibernate project, which is a port of the Hibernate ORM to .NET. I was very heavily involved in that for a long time. I was also involved in several open-source projects, and my tagline for most of those projects was Rhino something: Rhino Mocks, Rhino Service Bus, Rhino DistributedHashTable, et cetera. So when I needed a name, I just smashed those things together and Hibernating Rhinos came out, and it was hilariously funny to me at the time. Just to be clear, I live in Israel, which means that every time I need to go to the store and buy a pen, they ask me, "Who should the invoice be made out to?" I have to tell them, "Hibernating Rhinos." Then I have to tell them how to spell that in Hebrew. So right now I really wish I had called the company "One, Two, Three," or something like that, if only for that reason.

Wes Reisz: Now you're going back to my early days. I came up as a Java developer and then transitioned into doing C# for some different companies and in some different roles. So I remember NHibernate really well, from when I was grasping for tools similar to the ones I was familiar with in the Java world, to bring into the C# world. So you definitely triggered some memories for me.

Oren Eini: Triggered is the right word, I think.

What's the problem that RavenDB is solving? [02:57]

Wes Reisz: It triggered. That's a good word, huh? So in the introduction, I mentioned that Raven is a fully transactional NoSQL document database. Go deeper than that. What's the problem that RavenDB is solving?

Oren Eini: Go back to 2006-7, thereabouts. At the time, I was very big in the NHibernate community. I was going from customer to customer, building business applications and helping them meet their performance and reliability goals, all of the usual things that they want to have. At the time, the most popular databases I worked with were SQL Server, MySQL, sometimes Oracle, stuff like that, and every single customer ran into problems. Now, I was working with NHibernate and I had a very deep level of knowledge about how to make NHibernate do things. I was also a committer, so I could make changes to the codebase and get them in. Still, it felt like this never-ending journey of trying to make things work properly. At some point, I realized that the problem wasn't with the tooling that I had; the problem was the actual issue that I was trying to solve. Also, remember that at this time domain-driven design was all the rage, and everyone wanted to build a proper model and domain for their system and build things properly.

But there was this huge impedance mismatch between how you represent your data in memory (strongly typed objects, a lot of interconnections and complex things) and how you store it. You have to map that to a database and, oh my God, you have 17 tables for a single object if you want a complex object graph with polymorphism and queries and things like that: you have a discriminator table, you have a union table, you have partial table inheritance. Also, we are on a podcast, but people, if you could see his face right now, it's trigger, trigger, trigger all over the place. The problem was you couldn't do that; the database that you used actually fought you. Your other option was basically building transaction scripts, which are basically all reads and writes to the database. But we're building such complicated applications today, and 15-20 years ago it was the same in many respects, so the amount of back and forth between the application and the database was huge.

So I started looking into other options and I ran into NoSQL databases at the time, and it was amazing, because it broke an assumption in my head that the relational model is how you store data for persistence. So I started looking into all of those non-relational databases, and at the time there were many of them. In 2007 or thereabouts came the Dynamo paper from Amazon, which had a huge impact on the database scene. There were all sorts of projects, such as Riak and Project Voldemort, and much other stuff like that, plus MongoDB and CouchDB at the time, and all of them gave you a much better system to deal with a lot of those issues.

However, if you actually looked into what you were giving up, it was horrendous. NoSQL databases typically did not have transactions, which is fine if you are building a system that can throw data away. MongoDB famously was meant to store logs: okay, we lose one or two entries once in a while, no big deal, that's what it was meant for. So transactions were something that happened to someone else. But then you realize that, okay, I want to build a business application and I actually care about my data. I cannot accept no transactions. Some databases said, "You can get transactions if you write this horribly complicated code in order to do that," which still isn't actually safe, but pretends to be. So I started thinking: I want to have a database that would be transactional, that would give me all of the usual benefits that I get from a relational database, but also give me the proper modeling that I want.

Wes Reisz: What did that first MVP look like? So you set out with this goal, what came out?

Oren Eini: So the first version was basically this: there is a storage engine that comes with Windows called ESENT. It was recently open-sourced, recently as in two or three years ago. This is a DLL that comes with every version of Windows and implements an ACID ISAM (indexed sequential access method). That's very old terminology, I'm talking about the 70s, even the 60s, but it is an amazing technology in many respects. I took that as my ACID layer, plugged in Lucene for indexing, and mixed them together with a fairly primitive UI at that point. I then created a client API that mimicked, to a large extent, the API of NHibernate and other ORMs. That gave you the ability to operate on actual domain objects and persist them in a transactional manner, with all of the usual things, such as change tracking. On top of that, you had full-text search, and you had the ability to query the data in any arbitrary way and the database would just respond to you.

Wes Reisz: So fast forward 15 or 16 years from when that MVP was released. The NoSQL landscape is definitely crowded, but there's also a lot of overlap. So where does RavenDB fit into the landscape, next to things like Cassandra and MongoDB? Where does RavenDB excel and how does it differentiate itself?

Oren Eini: RavenDB is a document database; it stores documents in JSON format. In addition to JSON documents, you can attach additional data to a document. The data can be attachments: binary data of arbitrary size. We like to think about them like attachments on an email, which turned out to be a really useful feature. You can also store distributed counters. This is very similar to the Cassandra feature, where you may have a high-throughput counter that is being modified on many nodes at the same time and converges to the right value. Again, this is a value that you can attach to a document. A great example: if you have an Instagram post and you want to count the number of flags, that's very easy to do with this sort of feature. We also have revision support, so you can have an automatic audit trail of all of the changes that happen to a document.
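For readers unfamiliar with counters that converge, here is a minimal sketch of the general technique, a PN-counter CRDT, in Python. It is an assumption that this resembles what RavenDB does internally, and the class and method names are hypothetical. Each node only bumps its own slot, and merging takes the per-node maximum, so replicas that exchange state in any order, any number of times, end up with the same total.

```python
from collections import defaultdict


class DistributedCounter:
    """PN-counter sketch: per-node increment and decrement totals."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.incs = defaultdict(int)  # node -> total increments made on that node
        self.decs = defaultdict(int)  # node -> total decrements made on that node

    def add(self, delta):
        # A node only ever modifies its own slot, so concurrent updates never collide.
        if delta >= 0:
            self.incs[self.node_id] += delta
        else:
            self.decs[self.node_id] += -delta

    def merge(self, other):
        # Per-node max is commutative and idempotent: merge order and repeats don't matter.
        for node, v in other.incs.items():
            self.incs[node] = max(self.incs[node], v)
        for node, v in other.decs.items():
            self.decs[node] = max(self.decs[node], v)

    @property
    def value(self):
        return sum(self.incs.values()) - sum(self.decs.values())
```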

Finally, we have the notion of time series: we can attach values that change over time to a document. For example, I have a smartwatch, so my heart rate is being tracked over time, and I can add that to RavenDB. The idea is that each one of those four or five different aspects of a document has its own dedicated and optimized system, meant to make it easy for you to implement all sorts of features. But going back to documents, a document is a JSON object that can be of arbitrary size. Practically speaking, RavenDB can accept a document up to about two gigabytes in size. Do not go that way. Other important features of RavenDB: you have a query language, we call it RQL, and it's very similar to SQL, except that the select is basically at the end. You can also issue commands, such as patches or transformations that happen on the server side. You can have a transaction that modifies multiple documents, from the same collection or from different collections, all at once. And you have transactions that are either node-local or cluster-wide.

How can RavenDB claim to be both an AP and CP system? [10:59]

Wes Reisz: I want to switch over and talk about the CAP Theorem's consistency and availability guarantees. A lot of times when we talk about databases and distributed systems, the CAP Theorem quickly comes up. Again, as I said in the intro, you choose between consistency (linearizability), availability, and partition tolerance, and by the way, you always have to choose partition tolerance. So you can be AP or you can be CP. However, if you read the documentation for RavenDB, it says, "We're both." How is that possible?

Oren Eini: The question is: when? Let me try to explain. The CAP Theorem only applies when you have an actual failure. That's important. You can be both consistent and available as long as there is no failure in place. The problem is that you have to assume that at some point failures will happen. So the question is, how do we handle both the failure scenario and the success scenario? It turns out that this is really hard to answer globally for all of your operations. So with RavenDB, you have two different levels that you can choose. There is the notion of a node-local transaction and there is the notion of a cluster-wide transaction. Cluster-wide transactions require you to go to a majority of the nodes. This is a standard distributed consensus system, where we are consistent and partition tolerant (CP), and that's great for a certain set of operations that you have.
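The majority requirement for cluster-wide transactions comes down to simple arithmetic. A minimal Python sketch; the function names are illustrative, not RavenDB APIs:

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a majority (a Raft-style quorum)."""
    if cluster_size < 1:
        raise ValueError("a cluster needs at least one node")
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """How many nodes can be down while cluster-wide (CP) writes still commit."""
    return cluster_size - majority(cluster_size)


def cluster_wide_write_allowed(cluster_size: int, reachable_nodes: int) -> bool:
    # CP behavior: refuse the write rather than let two sides of a partition diverge.
    return reachable_nodes >= majority(cluster_size)
```

One consequence worth noting: a four-node cluster needs three nodes for a majority, so it tolerates no more failures than a three-node cluster.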

Wes Reisz: Specifically, that's Raft, right? When it comes to Raven, Raft is-

Oren Eini: Correct. By the way, it's funny, but I think that at this point there is Raft, Paxos and Viewstamped Replication. All of them, if you look deeply enough, are the same thing.

Wes Reisz: It's just that the complexity of Paxos is drastically different from Raft.

Oren Eini: Oh dear God. Just a very small rant about Paxos. I have read all of the Paxos papers. I went through all of the leading Paxos implementations and a bunch of other implementations. I have written a Paxos implementation that works. I have sold it and used it commercially, and to this day, and I'm talking about 17 years of distributed programming experience, I have no sense of how it works.

Wes Reisz: Nice. You make the rest of us feel good about it too.

Oren Eini: Then I read the Raft paper and it was like hearing angels singing: I get it. There are details, and Raft isn't trivial to implement; there are all sorts of pitfalls that you have to be aware of, but I got it. I understood the concept of how it is supposed to work, and I could reason about it.

Wes Reisz: You're the first person to make that comment on the podcast to me.

Oren Eini: Because that was what it was designed for: to be understandable. So we use Raft for distributed consensus, and that's good for high-value things. But the problem with distributed consensus is that you have to go through it every single time. In a cluster, that means that in order to accept a decision, you have to make at least two network round trips. If you are working on a transactional system, you also have to pay the cost of multiple fsyncs to the disk, and that can dramatically increase the latency that you have on the happy path. I'm not talking about the failure mode anymore; just on the happy path, you have higher latency. There are ways of trying to reduce and optimize that, but at the end of the day, you have to do network round trips and fsyncs to the disk, and those things cost a lot.

So RavenDB has this notion of a node-local transaction, and the idea here is that in many cases, you just want to store the data, and if there is a failure, we can recover afterward. In the case of RavenDB, there are two layers to its distributed nature. At the bottom layer, you have database nodes running a gossip protocol, and a node-local transaction writes the data to one of them and then replicates it to the rest of the nodes in the cluster. At the cluster level, you can have a cluster-wide transaction, which applies a transaction to all of the nodes in the cluster. So those are the two levels that you have.
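To make the two levels concrete, here is a toy, single-process model in Python. It is a sketch under heavy simplifying assumptions: no real networking, no gossip protocol, no Raft log, no conflict resolution, and lagging nodes never catch up on cluster-wide writes. `ToyCluster` and its methods are hypothetical names. What it does show is the behavioral difference during a partition: the node-local write is acknowledged by the one node it reaches and spreads later, while the cluster-wide write is refused without a majority.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    up: bool = True
    docs: dict = field(default_factory=dict)


class ToyCluster:
    """Toy model of node-local (AP) versus cluster-wide (CP) writes."""

    def __init__(self, names):
        self.nodes = [Node(n) for n in names]
        self.outbox = []  # node-local writes not yet replicated everywhere

    def write_node_local(self, node, doc_id, value):
        # Acknowledge as soon as this one node has the write; replication happens later.
        if not node.up:
            raise ConnectionError(f"{node.name} is unreachable")
        node.docs[doc_id] = value
        self.outbox.append((doc_id, value))
        return "ack"

    def replicate(self):
        # Background step: push pending writes to every reachable node (eventual consistency).
        everyone_up = all(n.up for n in self.nodes)
        for doc_id, value in self.outbox:
            for n in self.nodes:
                if n.up:
                    n.docs[doc_id] = value
        if everyone_up:
            self.outbox.clear()  # otherwise keep them, to retry when nodes come back

    def write_cluster_wide(self, doc_id, value):
        # Refuse unless a majority of the nodes can take the write.
        reachable = [n for n in self.nodes if n.up]
        if len(reachable) < len(self.nodes) // 2 + 1:
            raise RuntimeError("no majority reachable: cluster-wide write rejected")
        for n in reachable:
            n.docs[doc_id] = value
        return "committed"
```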

How does latency/slowness affect different failure scenarios with RavenDB? [15:08]

Wes Reisz: One of the critiques of the CAP Theorem, I'm thinking specifically of Martin Kleppmann's paper from 2015 or so, called "A Critique of the CAP Theorem," and you referred to it a little while ago. It talks about failure modes: not just complete failure from a network partition, but also things like slowness or plain latency in the system. In this two-level design that you have, how does something like latency or slowness affect the different failure modes with RavenDB?

Oren Eini: It depends on how you want to manage that. So let's talk about failure modes for a second. I write a set of documents to RavenDB and I want them to be stored. By default, I would use a node-local transaction, which means that I get to the node and it persists the data to the disk.

Wes Reisz: So real quick. When you say a node-local transaction, is that a specific operation you invoke, or are you just writing to RavenDB and it happens to be a node-local operation?

Oren Eini: So you have a transaction: you have a session, you modify things, and then of course you save the changes. That batch, all of the changes that happened in the transaction, is sent to RavenDB in one shot. That batch of operations is going to be a transaction and it's going to be persisted. You have the option of making that transaction cluster-wide or node-local. If it is a node-local transaction, then that node will tell you, "Okay. I'm done," once it has flushed the data to disk and made it durable, and that's it. If you ask for a cluster-wide transaction, then it has to get consensus that this has been persisted to disk on a majority of the nodes in the cluster. So this is where you have the latency versus consistency issue.
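The session-and-batch flow described here is the classic unit-of-work pattern. Below is a sketch in Python with a hypothetical `Session` class and a `send` callable standing in for the network; it is not the RavenDB client API, only an illustration of "track changes locally, send them once, with a per-batch choice of transaction mode":

```python
class Session:
    """Hypothetical unit of work: changes are tracked locally and sent as one batch."""

    def __init__(self, send, cluster_wide=False):
        self._send = send            # callable that ships one batch to the server
        self._cluster_wide = cluster_wide
        self._pending = {}           # document id -> latest version of the document

    def store(self, doc_id, doc):
        self._pending[doc_id] = doc  # nothing goes over the network yet

    def save_changes(self):
        batch = {
            "mode": "cluster-wide" if self._cluster_wide else "node-local",
            "commands": [{"id": k, "doc": v} for k, v in self._pending.items()],
        }
        self._pending = {}
        # One round trip; the server is expected to apply the batch as one transaction.
        return self._send(batch)
```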

Wes Reisz: But just to that point, as the developer writing it, as the person interacting with it, you do have to be thinking about where you want this data persisted, cluster-wide or local. You need to make that-

Oren Eini: No. In all cases, the data would be persisted cluster-wide. The issue is, what consistency guarantees do you require for it? I know the value of the data that is involved, so I can make that determination on a case-by-case basis.

Wes Reisz: I got it. So you, as the person writing the code and executing the use case, are making the decision about what the trade-off is. Right. Got it.

Oren Eini: Another thing that is important is what happens if the node that I'm trying to write to has failed. Then RavenDB transparently redirects the transaction to another node. So a node failure is just something that happens, and you typically don't notice.
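Failover of this kind can be sketched as trying each known node in turn. In this Python sketch, nodes are modeled as plain callables and `ConnectionError` stands in for whatever failure the real client detects; both are assumptions for illustration, and `execute_with_failover` is a hypothetical name.

```python
def execute_with_failover(nodes, request):
    """Send `request` to the first node that answers; failed nodes are skipped."""
    last_error = None
    for node in nodes:
        try:
            return node(request)
        except ConnectionError as exc:
            last_error = exc  # remember why, then try the next node
    raise ConnectionError(f"all {len(nodes)} nodes failed") from last_error
```

The caller only sees an error when every node has failed, which matches the "you typically don't notice" behavior described above.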

RavenDB is implemented in C#. What were the design decisions around that choice? [17:38]

Wes Reisz: There's another question that I wanted to ask you about RavenDB. One of the things that stood out to me as I was diving in, looking into it, is that the codebase is in C#. What's the design thinking around C#, as opposed to some of the other systems languages that you might use today, say something like Rust?

Oren Eini: I want to touch a little bit on Rust, because it has huge hype and I wrote a not insignificant amount of code in Rust. I consider myself an okay developer, I know quite a few languages, and I have enough experience to say that I've been around the block, and Rust is super annoying. You have to continuously make the compiler happy. I spent a few weeks to two months, separated by a couple of years, trying to do things, and it was just really annoying to get things done. Conversely, in C, I swim along, because there is no mental overhead, and then I crash because of an invalid memory access or something like that. Here's the issue: go into Rust and try to implement a binary tree, or a trie, or any interesting data structure that requires you to modify memory. You realize that it starts out impossible and, as you learn more, it becomes merely extremely hard. But that's specific to Rust, because I find it a hard language to communicate with. Going back to why C#.

Wes Reisz: Just real quick on that, though: the Rust community would say that constructs like ownership within the language allow you to do things like you're talking about more safely than in languages like C++. So they'd argue that it's necessary complexity.

Oren Eini: Let me give you a great example. As a database, I deal with pages. I get a page from the buffer manager, and this is a mutable page, because I'm going to write to it: I need to update the header and I need to update the content of the page. The easiest way for me to manage that in C, for example, is to say, "Okay. Let me cast the page pointer to the header struct and modify that. Let me cast the data portion of the page to an array of data objects and manage that." I try to do that with Rust and, no, you cannot have multiple mutable references into the same memory. But that's what I actually want, because I have this piece of memory that I arbitrarily decided needs to be mutated in multiple ways.

The typical way you would resolve that in Rust would be, oh, just use a Box or one of those sorts of things. But in this case, I'm looking at the raw bytes that I'm working on. So you have to jump through multiple hoops to do that, or you have to copy the data, mutate it, and then copy it back, which defeats the purpose, because I want a zero-copy system. I actually ran a challenge on implementing a trie in Rust, but the challenge was: here is 32KB of memory, and this is the only thing that you are allowed to use. This is your buffer; implement the trie on top of it. People did it in Rust, but in a way that was completely unintuitive and broken.
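The header-plus-data overlay described above is easy to express when a language lets you alias memory. Here is a Python sketch using `memoryview` and `struct` over a single `bytearray`; the header layout (page number, flags, entry count) is hypothetical, chosen only to show two mutable views of the same page with no copying:

```python
import struct

PAGE_SIZE = 4096
# Hypothetical page header: page number (u64), flags (u16), entry count (u16).
HEADER = struct.Struct("<QHH")

page = bytearray(PAGE_SIZE)  # stands in for a page handed out by a buffer manager

# A second, typed view over the data area of the same bytes: no copy is made.
# "I" is the native unsigned int (32 bits on mainstream platforms).
entries = memoryview(page)[HEADER.size:].cast("I")


def add_entry(value):
    """Append a value to the data area and bump the count in the header, in place."""
    page_no, flags, count = HEADER.unpack_from(page, 0)
    if count >= len(entries):
        raise OverflowError("page is full")
    entries[count] = value  # writes through the data view
    HEADER.pack_into(page, 0, page_no, flags, count + 1)  # writes through the header
```

Both writes land in the same `page` buffer, which is the "cast the header, cast the data" pattern without copying data out and back in.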

Wes Reisz: All right. So, C#. So you don't like Rust.

Oren Eini: On the other hand, by the way, Zig is an amazing language; we love it. Zig is very small. It competes with C and C++, but it gives me all of the tools that I want to build system-level software, it gets out of my way, and it's nice.

Wes Reisz: But you still have C#?

Oren Eini: I still have C#, yes, and I will explain why. C# is an interesting language. Go back to 2000, when it came out, and realize that one of the things that made C# distinct from Java was that Microsoft was blocked from adding extensions to Java that made it work well on Windows: things like being able to easily call Win32 APIs and being able to integrate well with COM objects, that sort of thing. When Microsoft was forced to go with .NET and the CLR, they had a really interesting design. For example, you had value types from day one, and you had the ability to allocate memory off the heap from day one, because all of the existing infrastructure that you would integrate with required that. So calling into native code was trivial. That also meant that, to a very large extent, the language was already geared to give you multiple levels at which you can work.

I'm going back to C# 1.0, but moving ahead to C# in 2015, at that time we had reached a decision point for RavenDB. I built the architecture for the first two versions of RavenDB when I didn't know that much about databases, and we learned a lot in that time. We couldn't push that architecture any further, and we had all of the classic issues with managed systems: high GC utilization, inability to control what's going on in many cases, stuff like that. So I sat down and wrote a design document for how we wanted to do things.

One of the things that was quite obvious to me is that I have to take ownership of all of the big resources that I have: CPU, I/O, and memory. We looked into doing things in C++ or C at the time, and that was like, okay, let's start walking barefoot to Beijing. I can do that, there is a land path from here to Beijing, but that's probably not something that I want to do. Because, okay, where is my hashtable? How do I do things like concurrent data structures? You can do that, but it's a huge, complicated topic.

So we looked into whether we could control the resources that we want in C#, and it turns out that you can: you can allocate native memory, you can control your allocations. There are well-known patterns for object pooling, for off-heap allocations, for building your own thread pool. So you can have fine-grained control over where and how you execute. Here's a great example: in RavenDB, which is a C# application, we have our own string type. It's insane. I mean, the classic example of a bad C++ codebase is that it has three different string types.
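Object pooling, one of the patterns mentioned here, can be sketched in a few lines. This Python version is an illustrative assumption, not RavenDB code: the names are hypothetical and it is not thread-safe. The point is that steady-state rent/return cycles stop allocating:

```python
class BufferPool:
    """Hands out fixed-size buffers and takes them back for reuse."""

    def __init__(self, buffer_size):
        self.buffer_size = buffer_size
        self._free = []
        self.allocations = 0  # how many buffers were actually created

    def rent(self):
        if self._free:
            return self._free.pop()  # reuse: no new allocation
        self.allocations += 1
        return bytearray(self.buffer_size)

    def give_back(self, buf):
        if len(buf) != self.buffer_size:
            raise ValueError("buffer does not belong to this pool")
        # Contents are left as-is; renters must not assume a zeroed buffer.
        self._free.append(buf)
```

A thousand rent/return cycles against this pool allocate exactly one buffer.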

Well, RavenDB obviously deals a lot with JSON. We did a performance analysis of what was costing us in terms of CPU utilization, and it turned out that 45% of our time was spent in one method, writeEscapedString(), because whenever we wanted to write a string to the network or anywhere else, we had to check whether this JSON string had an escape character in it and then escape it. And we write a lot of JSON strings. So what we did was this: RavenDB workloads are typically skewed heavily toward reads, so it pays to do that work once. When we receive a document from the network, we store it internally in a format that tells us where the escape positions are.
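The escape-position idea can be sketched as: scan once when the string comes in, remember where the characters that need escaping are, and on output copy the string as-is when there are none. This Python sketch works on `str` values rather than raw bytes and uses hypothetical names; it is not RavenDB's internal format. Its output matches `json.dumps(text, ensure_ascii=False)`:

```python
import json

# Characters JSON requires to be escaped: quote, backslash, and control characters.
_NEEDS_ESCAPE = {'"', "\\"} | {chr(c) for c in range(0x20)}


class StoredString:
    """A string stored together with its escape positions (computed once, on input)."""

    def __init__(self, text):
        self.text = text
        self.escape_positions = [i for i, ch in enumerate(text) if ch in _NEEDS_ESCAPE]

    def write_json(self):
        if not self.escape_positions:
            return '"' + self.text + '"'  # fast path: copy the whole block as-is
        out, prev = ['"'], 0
        for i in self.escape_positions:
            out.append(self.text[prev:i])                 # unchanged run
            out.append(json.dumps(self.text[i])[1:-1])    # escape just this character
            prev = i + 1
        out.append(self.text[prev:])
        out.append('"')
        return "".join(out)
```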

So in most cases, when I write it out, I can just send the full block directly to the network without having to do any processing. We also implemented our own JSON document type, and the reason for that was that it gives us a system that is zero-copy. Think about it: you have a JSON string of, let's say, 100KB, and I want you to give me the name of the third person. You have two options. You can parse that into an in-memory object and then just process the DOM, or you can try to do a sequential scan and hope that you find the result before the end. Both of which are incredibly expensive.

But there is another thing that you have to consider here. If you parse a JSON string into an object, you are at the mercy of the structure of that object. What do I mean by that? The cost of GC in all modern garbage-collected languages is based on the number of reachable objects that you have. If you have a very complex JSON object, then as long as it is referenced, its entire object graph is reachable, and now the GC has to do far more work.
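A rough way to see the "number of reachable objects" argument: count the objects a tracing collector would have to walk in a parsed JSON tree, versus keeping the raw bytes as one object. This Python sketch is conceptual (CPython's cycle collector, for instance, only tracks container objects), and `count_reachable` is a hypothetical helper:

```python
import json


def count_reachable(value):
    """Count the objects in a parsed JSON tree (dicts, lists, keys, and leaf values)."""
    if isinstance(value, dict):
        return 1 + sum(count_reachable(k) + count_reachable(v) for k, v in value.items())
    if isinstance(value, list):
        return 1 + sum(count_reachable(v) for v in value)
    return 1


raw = json.dumps({"people": [{"name": f"p{i}", "age": i} for i in range(100)]}).encode("utf-8")
parsed = json.loads(raw)
```

Here the parsed tree is 503 objects, while `raw` is a single `bytes` object.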

So with RavenDB, a document is represented as a pointer into a file, and we can just go directly into that. We can then traverse the document using pointer arithmetic to find the exact value that we want, and then send it over the network. That is 1,800 times faster than the alternative. The numbers are utterly insane, but it turns out that this is not the whole story. When you start to control your own memory, you start doing object pools and native memory and managing that yourself. Then you have all of these nice, fast things, but you have also removed from the purview of the GC so much of what it used to do. So now the GC has a far smaller managed heap to work through, and that helps too.
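Here is a sketch of the "give me the third person" lookup done with offsets instead of parsing. The binary layout (a count, an offset table, then length-prefixed UTF-8 values) is a hypothetical format made up for this example, not RavenDB's document format; the point is that finding element `n` costs a few fixed-position reads, whatever the size of the buffer:

```python
import struct


def encode_names(names):
    """Hypothetical layout: [count][offset_0 .. offset_n-1][len_0][bytes_0][len_1][bytes_1]..."""
    blobs = [n.encode("utf-8") for n in names]
    offsets, pos = [], 0
    for b in blobs:
        offsets.append(pos)  # offset of this entry within the data area
        pos += 4 + len(b)
    header = struct.pack(f"<{len(names) + 1}I", len(names), *offsets)
    body = b"".join(struct.pack("<I", len(b)) + b for b in blobs)
    return header + body


def nth_name(buf, n):
    """Jump straight to element n via the offset table; nothing else is decoded."""
    view = memoryview(buf)
    (count,) = struct.unpack_from("<I", view, 0)
    if not 0 <= n < count:
        raise IndexError(n)
    data_start = 4 + 4 * count
    (offset,) = struct.unpack_from("<I", view, 4 + 4 * n)
    (length,) = struct.unpack_from("<I", view, data_start + offset)
    start = data_start + offset + 4
    return bytes(view[start:start + length]).decode("utf-8")
```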

But this is funny, because this is where it starts to get really interesting. I don't have to do that level of work each and every time. I can absolutely say, "You know what? This is a debug endpoint, it gets called once every two hours, if someone is feeling generous. I can just write it the fastest, quickest way; it generates some garbage, it allocates some memory, I don't care." Which means that my velocity for building things is far higher, but there is also another thing here.

In C++, in Rust, in Zig, any of the native languages, what happens if you mess up and lose the reference to an object? Well, you have a memory leak. Enjoy finding that. Here's what happens with the way we did things. We have native memory, and if we lose the reference to it, the GC will come and clean up after me, because I have a managed object that holds a handle to this native memory. If I lose access to that object, the GC collects it and executes its finalizer. In debug builds I get this big notice: "Hey, something happened, you missed something," and I go and fix that. But in production, it means that I have someone making sure that I'm still up and running, even if something went wrong.

Wes Reisz: So you chose C# because it gives you a managed platform that lets you operate at a lower systems level, and still move quickly when you don't need that lower-level programming, improving velocity by working at a higher level. So you can operate at two levels, plus get a managed runtime.

Oren Eini: There is actually another interesting thing that came up here. We started very early and it was a rough journey, but along the way, something really interesting happened in the community. All of the things that we had to implement on our own, direct memory management and things like that, are suddenly in the framework: we have `Span<T>` and `Memory<T>` right now, and there is far greater awareness in the community and in the codebase in general of the hidden costs that we have. For example, one of the things that we had to implement on our own was a StreamReader. There is a StreamReader in the .NET framework, but I cannot use it, because whenever you create a new StreamReader, it allocates two buffers, one that is 4KB in size and one that is 2KB in size. Why? Because those are the internal buffers that it needs to map between bytes and characters.

That's bad for me if I have to create a lot of them, so I had to create my own that avoided that. Now, this has changed: the fact that .NET became open source, and that there is active encouragement of the community around it, meant that a lot of the minor irritations got removed. If you look at the .NET release notes since 3.2.1 or something like that, you can see that there is a huge number of community contributors. Each one of them removed some small, minor annoyance: here is an allocation, here is a slightly better way of doing that sort of thing, and it turns out that this adds up a lot. We had a release where we just updated the .NET version that we're running, and CPU cost dropped by about 20 percentage points: the baseline was at 30% CPU most of the time, and then it dropped to something like 10% CPU.
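The fix for per-instance buffer allocations is to let the caller own the buffer. Here is a Python sketch of that pattern, assuming a binary stream that supports `readinto` (as `io.BytesIO` and files opened in binary mode do); `iter_chunks` is a hypothetical helper, not a port of RavenDB's reader:

```python
import io


def iter_chunks(stream, buffer):
    """Yield views of the stream's data using one caller-owned, reusable buffer."""
    view = memoryview(buffer)
    while True:
        n = stream.readinto(view)  # fills the existing buffer; allocates nothing new
        if not n:
            break
        yield view[:n]  # only valid until the next iteration overwrites the buffer


shared = bytearray(4)  # in practice, a buffer rented from a pool and reused by many readers
chunks = [bytes(c) for c in iter_chunks(io.BytesIO(b"hello world"), shared)]
```

Each reader borrows `shared` instead of allocating its own internal buffers, so creating many readers stays cheap.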

What’s next for RavenDB? [30:47]

Wes Reisz: All right. Oren, I need to start to wrap. That was an awesome conversation with you. I want to ask about what's next. I know that sharding is going to be re-added, it was removed a while back and it's being added again. So tell me about sharding and why it was removed in the first place?

Oren Eini: So RavenDB had sharding back around 2011. Between 2015 and 2018 we did this big rewrite, and sharding didn't make the cut, or to be more exact, we explicitly removed sharding from it. Sharding is quite a complicated topic, and because it became niche, we decided not to try to support it. But I want to explain something about how this works. The sharding support we had in 2011 was from the client perspective. You would go and define the sharding configuration on the client, and then the client would know how to route requests and things like that. That works and that's great.

But what ended up happening over time is that we realized we want to have as little configuration as possible on the client side. The reason for that is operations: I want to be able to modify things without having to go and redeploy code to all of my clients. I want to be able to go to the database engine, make the change, and have it applied. So that was a real issue. So what we decided to do was implement sharding on the server side, and this is actually what we are busy doing for the 6.0 release of RavenDB, which we hope to release in mid Q3 of this year.
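One common way to implement server-side routing is to hash document IDs into a fixed number of buckets and keep the bucket-to-shard map on the server. The following Python sketch assumes that design; it is not a description of how RavenDB 6.0 actually shards, and `ShardRouter` is a hypothetical name. Because clients only send document IDs, moving a bucket is a server-side change with no client redeploy:

```python
import hashlib


class ShardRouter:
    """Hypothetical server-side router: document ID -> bucket -> shard."""

    def __init__(self, shards, buckets=1024):
        self.buckets = buckets
        # The bucket-to-shard map lives only on the server and can be changed there.
        self.bucket_to_shard = {b: shards[b % len(shards)] for b in range(buckets)}

    def bucket_of(self, doc_id):
        # A stable hash (unlike Python's per-process salted hash()) so every server agrees.
        digest = hashlib.sha256(doc_id.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "little") % self.buckets

    def shard_for(self, doc_id):
        return self.bucket_to_shard[self.bucket_of(doc_id)]

    def move_bucket(self, bucket, shard):
        # Rebalancing is a server-side configuration change; clients are unaffected.
        self.bucket_to_shard[bucket] = shard
```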

Wes Reisz: All right. Oren, well, thank you for the conversation today. Thank you for joining us and I look forward to chatting with you again in the future.