When building a distributed system, we have to consider many aspects of the network. This has led to many tools to help software developers improve performance, optimize requests, or increase observability. Service meshes, sidecars, eBPF, layer three, layer four, layer seven, it can all be a bit overwhelming. In this podcast, Jim Barton explains some of the fundamentals of modern service meshes, and provides an overview of Istio Ambient Mesh and the benefits it will provide in the future.

Key Takeaways

- Ideally, a service mesh should be transparent, with developers needing to know as little as possible about the mesh.

- The three pillars of service mesh are connect, secure, and observe.

- eBPF is adding capabilities, but in an evolutionary, not revolutionary way.

- The three goals of Istio Ambient Mesh are to reduce the cost of ownership, reduce the operational impact, and increase performance, especially at scale.

- By leveraging shared infrastructure, Ambient Mesh can reduce the number of sidecars needed, and also decrease the latency of some traffic.

Transcript

Introduction [00:01]

Thomas Betts: Hi everyone. Today's episode features a speaker from our QCon International Software Development Conferences. The next QCon Plus is online from November 30th to December 8th. At QCon, you'll find practical inspiration and best practices on how to implement emerging software trends directly from senior software developers at early adopter companies to help you adopt the right patterns and practices. I'll be hosting the modern APIs track so I hope to see you online. Learn more at qconferences.com.

When building a distributed system, we have to consider many aspects in the network. This has led to many tools to help software developers improve performance, optimize requests, or increase observability. Service meshes, sidecars, eBPF, layer three, layer four, layer seven, it can all be a bit overwhelming. Luckily, today I am at QCon San Francisco and I'm talking with Jim Barton, who's presenting a talk called Sidecars, eBPF and the Future of Service Mesh.

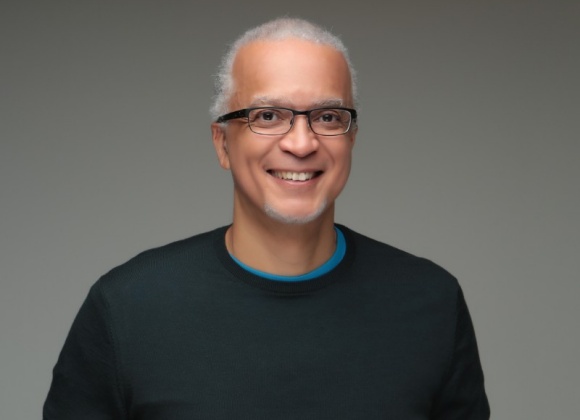

Jim Barton is a field engineer for Solo.io whose enterprise software career spans 30 years. He's enjoyed roles as a project engineer, sales and consulting engineer, product development manager, and executive leader of Techstars. Prior to Solo, he spent a decade architecting, building, and operating systems based on enterprise open source technologies at the likes of Red Hat, Amazon, and Zappos.

And I'm Thomas Betts, cohost of the InfoQ Podcast, lead editor for architecture and design at InfoQ, and an application architect at Blackbaud. Jim, welcome to the InfoQ Podcast.

Jim Barton: Thank you.

Fundamentals of service mesh [01:17]

Thomas Betts: Let's start with setting the context. Your talk's about the future of service mesh, but before we get to the future, can you bring us up to the present state of service mesh and what are we dealing with?

Jim Barton: Sure. So service mesh technology has been around for a few years now. We've gained a lot of experience over that time and experimented with some different architectures, and are really getting to a point now where I think we can make some intelligent observations based on the basic experience we have and how to take that forward.

Thomas Betts: How common are they in the industry? Is everybody using them? Is it just a few companies out there? What's the adoption like?

Jim Barton: When I started at Solo a few years ago, it was definitely in the early adopter phase, and I think now what we're seeing is we're beginning to get more into, I'm going to say, mid adopters. It's not strictly the avant garde who are willing to cut themselves and bleed all over their systems, but it's beginning to be more mainstream.

The use of sidecars in service mesh [02:08]

Thomas Betts: So sidecars was another subheading on your talk. Where are they at? Are they just a concept like a container? It's just where you run the code next to your application. And are there common products and packages that are intended for deployment as a sidecar?

Jim Barton: If you survey the history of service mesh technology, you'll see that sidecars are a feature of just about all of the architectures that are out there today. Basically, what that means is we have certain cross-cutting concerns that we'd like the application to be able to carry out. For example, very commonly security: being able to secure all service-to-service communication using mTLS. And so sidecars are a very natural way to do that because we don't want each application to have to do the heavy lifting associated with building those capabilities in. So if we can factor that capability out into a sidecar, it gives us the ability to remove that undifferentiated heavy lifting from the application developers and externalize that into infrastructure. So sidecars are probably the most common way that's been implemented since the "dawn of service mesh technology" a few years ago.

Thomas Betts: They really go hand in hand. Most of the service meshes have the sidecar, and that's just part of what you're going to see and part of your architecture?

Jim Barton: Absolutely. I'd say if you survey the landscape, the default architecture is the sidecar injection based architecture.

What do application developers need to know about service mesh? [03:26]

Thomas Betts: I've had a few roles in my career, but network engineer was never one of them. So I know that layer seven is the application layer and layer one is the wire, but I have to be honest, I would have to look up on Google whatever is in between. You talked about the whole point of the service mesh is to handle the things that the engineers and the application developers don't have to deal with. If they have the service mesh in place, what do they need to know versus what do they not need to know?

Jim Barton: And that's part of the focus of this talk is beginning to change the equation of what they need to know versus what they don't need to know. Ideally, we want the service mesh technology to be as transparent to application developers as possible. Of course, that's an objective that we aspire to but we may never 100% reach, but it's definitely something where we're moving strongly in that direction.

Thomas Betts: And the other part of my intro with the layers is where does all this technology operate? Where does the service mesh and the sidecar fit in? Where does eBPF fit into this? And does the fact that they operate at different levels affect what the engineers need to know?

Jim Barton: Wow, there's a lot to unpack in that question there. So I would say transparency, as I said before, is an ultimate goal here. We want to get to the point where application developers know as little as possible about the details of the mesh. Certainly, if you look at the way that developers would have solved these kinds of requirements five years ago, there would've been an awful lot of work required to integrate the various components (let's take mTLS as an example) into each individual application to make that happen, so there was a very high degree of developer knowledge of this infrastructure piece. And then, as we've begun to separate out the cross-cutting concerns from the application workloads themselves, we've been able to, over time, decrease the amount of knowledge that developers have to have about each one of those layers. There's still a fair amount, and that's one of the things that we're addressing in this talk and in the service mesh community in general: getting to a point where the networking components, whether it's layer four or layer seven, will be isolated more distinctly from the services themselves.

mTLS is the most common starting point for service mesh adoption [05:32]

Thomas Betts: You mentioned mTLS a few times. What are some other common use cases you're seeing?

Jim Barton: Sure, yeah. I mentioned mTLS just because we see that as probably the most common one that people come to a service mesh with. You look at regulated industries like financial services and telco, you look at government agencies with the Biden administration's mandate for Zero Trust architectures, and mTLS security, and so forth. So we see people coming to us with that requirement an awful lot but yeah, certainly there are a lot of others as well.

The three pillars of service mesh are connect, secure, and observe, and so we typically see things falling into one of those three buckets. So security is a central pillar and, as I said before, is probably the most common initial use case that users come to us with.

But also just think about the challenges of observability in a container orchestration framework like a Kubernetes. You have hundreds of applications, you have thousands of individual deployments that are out there, something goes wrong. How do you understand what's happening in that maze of applications and interconnections? Well, in the old world of monolithic software, it wasn't that difficult to do. You attach a debugger and you can see what's happening inside the application stack. But every debugging problem becomes a murder mystery that we have to figure out who done it, and where they were, and why. It just adds completely new sets of dimensions to the problems where you're throwing networking into the mix at potentially every hop along the way. So I'd say observability is also a very common use case that people come to us with, with respect to service mesh technology. What's going on? How can I see it? How can I understand that? And so service meshes are all about the business of producing that information in a form that it can be analyzed and acted upon.

Also, from a connectivity standpoint, so the third pillar, connect, secure, observe, from a connectivity standpoint, there's all kinds of complexities that can be managed in the mesh that in the past, certainly when my career started, you would handle those things in the application layer themselves. Things like application timeouts, failovers, fault injection for testing, and so forth. Even say at the edge of the application network, being able to handle more, let's say, advanced protocols, maybe things like gRPC, or OpenAPI, or GraphQL, or SOAP, those are all pretty challenging connectivity problems that can be addressed within service mesh.
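To make the "handled in the application layer" point concrete, here is a minimal Python sketch of the kind of retry-with-backoff logic an application would otherwise carry itself. The function name `call_with_retries` and its parameters are hypothetical, chosen for illustration; this is not code from any mesh or from the talk.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    attempts: int = 3,
    base_delay_s: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying on connection or timeout errors.

    Waits base_delay_s, then 2x, then 4x, ... between attempts
    (exponential backoff). `sleep` is injectable so tests need not wait.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError) as exc:
            last_error = exc
            # Only back off if another attempt is still coming.
            if attempt < attempts - 1:
                sleep(base_delay_s * (2 ** attempt))
    assert last_error is not None
    raise last_error
```

Every service that makes network calls would need some version of this, each with its own settings. Moving the behavior into the mesh means it can be configured once as policy instead of being reimplemented per application.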

Service mesh and observability [08:01]

Thomas Betts: So going back to the observability, since this is something that's at the network layer, is it just observability of the network or are we able to listen for things that are relevant to the application's behavior? Is that still inside the app code to say I need this type of observability, this method was called, or can you detect all that?

Jim Barton: With the underlying infrastructure that's used within the service mesh, just particularly if you look at things like Envoy Proxy, there are a whole lot of network and even some application level metrics that are produced as well. And so there's a lot of that information that you really do get for free just out of the mesh itself. But of course, there's always a place for individual applications publishing their own process-specific, application-specific metrics, and including that in the mix of things that you analyze.

Thomas Betts: And is that where things like OpenTelemetry, that you'd want to have all that stuff linked together so that you can say, "This network request, I can see these stats from service mesh and my trace gets called in with this application."?

Jim Barton: Absolutely. Those kinds of standards are really critical, I think, for taking what can be a raft of raw data and assembling that into something that's actually actionable.

The role of eBPF in service mesh [09:01]

Thomas Betts: So the observability, I think, is where eBPF, at least as I understand it, has really come into play, is that we're able to now listen at the wire effectively and say, "I want to know what's going on." What exactly does that mean? Does it eliminate the need for the sidecar? Does it augment it? And does it solve a different problem?

Jim Barton: eBPF has gotten a ton of hype recently in the enterprise IT space, and I think a lot of that is well deserved. If you look at open source projects like Cilium at the CNI networking layer, it really does add a ton of value and a lot of efficiency, additional security capabilities, observability capabilities, that sort of thing. When we think about that from a service mesh standpoint, certainly there's added value there, but most of what we see is eBPF being not so much a revolution as an evolution and an improvement in things that we already do.

Just to give you an example, things like short circuiting certain network connections. Before, without eBPF, for a call going from A to B, I might have to traverse a stack, go out over the network, traverse another layer seven stack, and then send that to the application. With eBPF, there are certain cases where I can short circuit some of that, particularly if it's intra-node communication, and can actually make that quite a bit more efficient. So we definitely see enhancements from a networking standpoint as well as an observability and a security standpoint.

Roles of developers and operations [10:29]

Thomas Betts: When it comes to implementing the eBPF or a service mesh in general, is that really something that's just for the infrastructure and ops teams to handle? Do developers need to be involved in that? Is that part of the devops overlapping term or is there a good separation of what you need to know and what you should be capable of doing?

Jim Barton: That's a great insight and a great observation. I think certainly our goal is to make the infrastructure as transparent as possible to the application. We don't want to require developers, let's say an application developer who's using service mesh infrastructure, to understand the details of eBPF. eBPF is a pretty low level set of capabilities. You're actually loading programs into the kernel and operating those in a sandbox within the kernel. So there's a lot of gory details there that a typical application owner or developer would rather not have to be exposed to, and so one of our goals is to provide the goodness that that kind of infrastructure can deliver, but without surfacing it all the way up to the application level.

Thomas Betts: So are the ops teams able to then just implement service mesh without having to talk to the dev teams at all, or does it change the design decisions of the application to say because we have the service mesh in place and we know that it can handle these things, I can now reduce some of the code that I need to write, but they need to work hand in hand to make those decisions?

Jim Barton: Definitely ops teams and app dev teams, they obviously still need to communicate. I see a lot of different platform teams, ops teams in our business and I think the ones that are the most effective, it's a little bit like an official at a sports event, at a basketball game, they're most effective when you're not aware of their presence. And so I think a good platform team operates in a similar way, they want to provide the app dev teams the tools they need to do what they do as efficiently as possible within the context of the values of the organization that they represent, but the good ones don't want to get in the way. They want to make the app dev teams be as effective, and efficient, and as free flowing as possible without interfering.

Thomas Betts: Building on that, how does this affect the code that we write, if at all?

Jim Barton: There are some cases today where occasionally the service mesh can impact what you do in the application itself, but those are the kinds of use cases that the community is moving as aggressively as it can to root out and make unnecessary. And I think a lot of the advances that are coming with the ambient mesh architecture, which we're going to talk about a fair amount in the talk tomorrow, play a big role in removing some of those cases where there does have to be a greater degree of infrastructure awareness on the application side.

Thomas Betts: Yeah, I think that's always one of those trade-offs. Back when you were just doing a monolith and there was probably a small development team, you had to know everything. When I started out, I had to know how to build the server, and write the code, and support it. And as you got to larger companies, larger projects, bigger applications, distributed applications, you started handing off responsibilities, but it also changed your architectural decisions sometimes, that you got to microservices because you needed independent teams to work independently. This is a platform service. You don't have a service mesh for just one node of your Kubernetes cluster, this seems to be something that has to solve everyone's problems. So who gets involved in that? Is it application architects? Is it developers on each of the teams to decide here's how our service mesh path is going to go forward?

Jim Barton: So how do we separate responsibilities across the implementation of a service mesh? I would definitely say in the most effective teams that I see, the platform team frequently takes the lead. They're often the ones who are involved earliest on because they're the ones who have to actually lay down the bits, and set down the processes and the infrastructure that are going to allow the development teams to be effective in their jobs. That being said, there's clearly a case where the application teams need to start onboarding onto the mesh, and so the goal is to make that process as easy as possible and the operational process there as easy as possible. There definitely needs to be a healthy communication channel between the two, but I would say, from my experience at least, if the platform team does its job well, it should be a little bit like an official at a basketball game that's being well officiated: they shouldn't be at the top of my consciousness every day as an app developer.

Who makes decisions regarding service mesh configuration? [14:39]

Thomas Betts: I think the good deployment situations I've run into personally have been I just write my code, check it in, the build runs, it deploys, and look, there's something running over there, and there's a bunch of little steps in the build pipeline and the release pipeline that I don't need to understand. And I know that I can call out to another service because I know how to write my code to call their services. How does that little piece change? Because I have my microservices and I know I have all these other dependencies. Is that another one of those questions of who gets to decide those names? It's basically the DNS problem, and DNS is always the problem, so are we offloading the DNS problem to the service mesh in some ways to embrace that third pillar of connectivity? Where does that come into play? Is it just another decision that has to be made by the infrastructure team?

Jim Barton: I would say a lot of those decisions, at least in my experience, it can vary from team to team where those kinds of decisions get made, but definitely there are abstractions in the service mesh world that allow you to specify, "Hey, here is a virtual destination that represents a target service that I want to be able to invoke." From an application client standpoint, it's just a name. I've used an API, I've laid down some bits that basically abstracts out all that complexity. Here's a name I can invoke, very easy, very straightforward way to invoke a service. Now, behind the scenes of that virtual destination, there may be all kinds of complexity. I may have multiple instances that are active at any point in time within my cluster, I may have another cluster that gets failed over to. If cluster one fails, I may fail over to another cluster that has that same service operational. In that case, there's a ton of complexity behind the scenes that the service mesh ideally will hide from the application developer client. At least that's the place that the people I work with want to get to.
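The virtual destination idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Istio or any particular mesh implements it: the `Endpoint` and `VirtualDestination` classes, the service name, and the addresses are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Endpoint:
    cluster: str
    address: str
    healthy: bool = True


class VirtualDestination:
    """Hypothetical stand-in for a mesh abstraction: one name, many backing endpoints."""

    def __init__(self, name: str, cluster_priority: List[str], endpoints: List[Endpoint]):
        self.name = name
        self.cluster_priority = cluster_priority  # preferred cluster first
        self.endpoints = endpoints

    def resolve(self) -> Endpoint:
        """Return a healthy endpoint from the highest-priority cluster that has one."""
        for cluster in self.cluster_priority:
            for endpoint in self.endpoints:
                if endpoint.cluster == cluster and endpoint.healthy:
                    return endpoint
        raise LookupError(f"no healthy endpoint for {self.name}")


# The client only ever sees the name; failover happens behind it.
orders = VirtualDestination(
    name="orders.mesh.internal",  # hypothetical service name
    cluster_priority=["cluster-1", "cluster-2"],
    endpoints=[
        Endpoint("cluster-1", "10.0.1.12:8080", healthy=False),  # cluster one is down
        Endpoint("cluster-2", "10.1.4.7:8080"),
    ],
)
print(orders.resolve().cluster)  # prints "cluster-2"
```

The design point is that the caller's code does not change when cluster one fails. Only the resolution behind the name does.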

OpenAPI and gRPC with service mesh [16:22]

Thomas Betts: And then I want to flip this around. So I deal with OpenAPI Swagger specs all the time, and that's how I know how to call that other service. We've talked about how my code should be unaware of the service mesh underneath. Does the service mesh benefit if it knows anything about how my API is shaped? And is there a place for that?

Jim Barton: I would say ideally, not really. There are definitely a variety of API contract standards out there that have different levels of support, depending on what service mesh platform you're using. OpenAPI, to take probably the most common example in today's world, is generally pretty well supported. I know that in our context with Istio, that's a very common pattern. And there are others. For some of the more emerging standards, for example GraphQL, I think the support is less mature because it's a newer technology and it's more complex. Whereas OpenAPI tends to take a service or a set of services and provide an interface on top of that, GraphQL, when you get to a large-scale deployment of that, potentially provides a single graph interface that can span multiple suites of applications that are deployed all over the place. So there's definitely an additional level of complexity as we move to a standard like GraphQL that's less well understood than say something that's been around for a while, like OpenAPI.

Thomas Betts: The third one that's usually thrown around with those two is gRPC. And that's where gRPC relies on something a little bit different: it's HTTP/2, and it has a few more network requirements. And so just saying that you're going to use gRPC already implies that you have some of that knowledge of the network in your application. You're not just relying on HTTP over port 80 or 443; everything's different underneath that. Does that change things again, or is that still in the wheelhouse of the service mesh, where it just handles that, it's fine, no big deal?

Jim Barton: I see gRPC used a lot internally within service meshes. I don't see it used so much from an external facing standpoint, at least in the circles where I travel. So you're absolutely correct, it does imply some underlying network choices with gRPC. I'd say because it is a newer, perhaps slightly less common standard than OpenAPI in practice, there's probably not quite as pervasive support for it in the service mesh community, but certainly in a lot of cases it's supported well and doesn't really change the equation, from my standpoint, vis-a-vis something like OpenAPI.

Sustainability and maintainability [18:49]

Thomas Betts: So with all of the service mesh technology, does this help application long term sustainability and maintainability? Having good separation of concerns is always a key factor. This seems like it's a good way to say, "I can be separated from the network because I don't have to think about connectivity and retries because that's no longer my application's problem." Is that something you're seeing in the industry?

Jim Barton: Absolutely. I'd say being able to externalize those kinds of concerns from the application itself is really part and parcel of what service mesh brings to the table, specifically the connect pillar of service mesh value. So yeah, absolutely. Being able to externalize those kinds of concerns into a declarative policy, being able to express that in YAML, store it in a repo, manage it with a GitOps technology like an Argo or a Flux, and be able to ensure that policy is consistently applied throughout a mesh, there's a ton of value there.
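One way to picture "policy consistently applied throughout a mesh" is as a comparison between a single desired policy and what each service is actually running. The Python sketch below is a deliberately simplified illustration; the policy keys, service names, and the `find_drift` helper are hypothetical, and it does not model how Argo, Flux, or Istio work internally.

```python
from typing import Any, Dict

Policy = Dict[str, Any]


def find_drift(desired: Policy, actual_by_service: Dict[str, Policy]) -> Dict[str, Policy]:
    """Return, per service, the live settings that differ from the desired policy."""
    drift: Dict[str, Policy] = {}
    for service, actual in actual_by_service.items():
        # Keep only the keys whose live value does not match the desired value.
        diff = {
            key: actual.get(key)
            for key, value in desired.items()
            if actual.get(key) != value
        }
        if diff:
            drift[service] = diff
    return drift


# Hypothetical desired policy, as it might be stored in a repo.
desired_policy = {"timeout_s": 2, "retries": 3, "mtls": "STRICT"}

# Hypothetical live settings observed for two services.
live_settings = {
    "orders": {"timeout_s": 2, "retries": 3, "mtls": "STRICT"},
    "billing": {"timeout_s": 2, "retries": 0, "mtls": "STRICT"},
}

print(find_drift(desired_policy, live_settings))  # prints {'billing': {'retries': 0}}
```

With the policy held as data in one place, inconsistency becomes something a tool can detect and correct rather than something each team has to remember.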

Service mesh infrastructure-as-code [19:43]

Thomas Betts: Your last point there about having the consistency, that seems to be very important. Microservices architecture, one of the jokes but it's true, is that you went from having one problem to having this distributed problem, and your murder mystery is correct. You don't even know that there is another house that the body could be buried in. So is this a place where you can see that service mesh comes in because it is here's how the service mesh is implemented, all of our services take care of these things, we don't have to worry about them getting out of sync, and that consistency is really important to have a good microservices architecture?

Jim Barton: Yeah, let me tell you a story. I spent many years at Red Hat and we did some long-term consulting engagements with a number of clients. One of them was a large public sector client whose name you would recognize, and we were working with them on a long-term, high touch, multi-week engagement. And we walked in one Monday morning and something had happened, I still don't know what it was, but our infrastructure was gone. Basically, it was completely hosed. And so in the old world, we would've been in a world of hurt. We'd have had to go debug, and so we started going down this path of all right, what happened? That's our first instinct as engineers, we want to know what happened, what was the problem?

And we investigated that for a while and finally someone made the brilliant observation, "We don't necessarily have to care about this. We've built this project right, we have all of the proper devops principles in place here, all we really have to do is go press this button. We can regenerate the infrastructure and by lunchtime we can be back on our development path again." And so that was the day I became a believer, a true dyed-in-the-wool believer in devops technology, and it was just that it was the ability to produce that infrastructure consistently based on a specification, and it's just invaluable.

Thomas Betts: I've seen that personally from one service, one server, one VM, but having it across every node in your network, across your Kubernetes, all of that being defined, and infrastructure as code is so important for all those reasons. That applies as well to the service mesh because you're saying the service mesh is still managed in YAML stored in a repo.

Jim Barton: It's basically the same principles you would apply to a single service and simply adopting them at scale, pushing them out at scale.

Ambient Mesh [21:53]

Thomas Betts: We spent a lot of time talking about the present. I do want to take the last few minutes and talk about what's the future? So what is coming? You mentioned ambient mesh architecture. Let's just start with that. What's an ambient mesh?

Jim Barton: Ambient mesh is the result of a process that Google and Solo have collaborated on over the past year, and it's something we are really, really excited about at Solo. And so we identified a number of issues, again, from our experience working with clients who are actually implementing service mesh, things that we would like to improve, things that we would like to do better to make it more efficient, more repeatable, to make upgrades easier, just a whole laundry list of things. And so over the past year, we've been collaborating on this, both Google and Solo are leaders in the Istio community, and just released in the past month, the first down payment, I'm going to say, an experimental version of Istio ambient mesh into the Istio community. And so what that does is it basically takes the old sidecar model that's the traditional service mesh model, and gives you the option of replacing that with a newer data plane architecture that's based on a set of shared infrastructure resources as opposed to resources that are actually attached to the application workloads, which is how sidecar operates.

Benefits of shared service mesh infrastructure [23:12]

Thomas Betts: What are the benefits of having that shared infrastructure? Is it you only need to deploy 1 thing instead of 10 copies of the same thing?

Jim Barton: The benefits that we see are threefold, really. One is just from a cost of ownership standpoint, replacing a set of infrastructure that is attached to each workload, and being able to factor that out into shared infrastructure. There are a lot of efficiencies there. There's a blog post out there that we produced, based on some hopefully reasonable assumptions, that estimates a reduction in cost and so forth on the order of 75%. Obviously, your mileage varies depending on how you have things deployed, but there's some significant savings from just an infrastructure resource consumption standpoint. So that's one, is just cost of ownership.

A second benefit is just operational impact. And so we see, particularly for customers who do service mesh at scale, let's pick a common one, let's say that there's an Envoy CVE at layer seven, and that requires you to upgrade all of the proxies across your service mesh. Well, that can become a fairly challenging task.

If you're operating at scale, you have to schedule some kind of downtime that can accommodate this rolling update across your entire service network so that you can go and then apply those Envoy changes incrementally. That's a fairly costly, fairly disruptive process, again, when you're operating at scale. And so by separating that functionality out from the application sidecar into shared infrastructure, it makes that process a whole lot easier. Now, the applications don't have to be recycled when you're doing an upgrade like that, it's just a matter of replacing the infrastructure that needs to be replaced and the applications are none the wiser, they just continue to operate. It goes back to the transparency we were talking about before. We want the mesh to be as transparent as possible to the applications that are living in it.

We talked about cost of ownership, we talked about operational improvements, and third, we should talk about performance. One of the things we see when operating service meshes at scale is that all of these sidecar proxies, they add latency into the equation. And the larger your service network is and the more complex the service interactions are, the more latency that gets added into the process with each service call. And so part of that is because each one of those sidecars is a full up layer seven Envoy proxy, so it takes a couple of milliseconds to traverse the TCP stack typically. And if you're doing that both at the sending end and the receiving end on every service call, you can do the math on how that latency adds up.

And so by factoring things out with the new ambient mesh architecture, we see, for a lot of cases, we can cut those numbers pretty dramatically. Let's just take an mTLS use case because, again, that's a very common one to start with. We can reduce that overhead from being two layer seven Envoy traversals at two milliseconds each, we can reduce that to being two layer four traversals of a secure proxy tunnel at a half millisecond each. And so you do the math on a high scale service and it cuts the additional latency, it improves performance numbers quite a bit.
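The "do the math" step can be worked through directly. The Python sketch below uses the per-traversal figures quoted above (2 ms for a layer seven sidecar, 0.5 ms for a layer four tunnel, two traversals per call). The chain of 10 sequential calls is a hypothetical number chosen for illustration, and the model ignores everything except proxy traversal time.

```python
def added_latency_ms(calls: int, traversals_per_call: int, ms_per_traversal: float) -> float:
    """Total proxy latency added across a chain of sequential service calls."""
    return calls * traversals_per_call * ms_per_traversal


# Figures quoted in the conversation; each call crosses a proxy at the
# sending end and at the receiving end, so two traversals per call.
SIDECAR_L7_MS = 2.0  # layer seven Envoy sidecar traversal
AMBIENT_L4_MS = 0.5  # layer four secure tunnel traversal
TRAVERSALS_PER_CALL = 2

# Hypothetical: one user request that results in 10 sequential service calls.
CALLS_PER_REQUEST = 10

sidecar_total = added_latency_ms(CALLS_PER_REQUEST, TRAVERSALS_PER_CALL, SIDECAR_L7_MS)
ambient_total = added_latency_ms(CALLS_PER_REQUEST, TRAVERSALS_PER_CALL, AMBIENT_L4_MS)
reduction = 1 - ambient_total / sidecar_total

print(f"sidecar: {sidecar_total} ms, ambient: {ambient_total} ms, reduction: {reduction:.0%}")
# prints "sidecar: 40.0 ms, ambient: 10.0 ms, reduction: 75%"
```

Per call, that is 4 ms of added latency in the sidecar model against 1 ms with layer four tunnels. The gap grows linearly with the number of calls in the path, which is why it matters most at scale.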

Thomas Betts: And obviously, that's what you're getting into, it makes sense at scale. This isn't a concern that you have with a small cluster. Going from two milliseconds to half a millisecond makes a difference when you're talking about tens of thousands of them.

Jim Barton: The customers we work with, I mean we work with a variety of customers. We work with small customers, we work with large customers. Obviously, the larger enterprises are the more demanding ones, and they're the ones who really care about these sorts of issues, the issues of operating at scale. And so we think what we're doing is by partnering with those people, we're driving those issues out as early in this process as possible so that when people come along with more modest requirements, it's just they're non-issues for them.

Future enhancements for GraphQL and service mesh [27:06]

Thomas Betts: Was there anything else in the future of service mesh besides the ambient mesh?

Jim Barton: First of all, the innovation within the ambient mesh itself is not done. Solo and Google wanted to get out there as quickly as possible something that people could get their hands on, and put it through its paces, and get us some feedback on that.

But we already know there are innovations within that space that need to continue. For example, we discussed eBPF a bit earlier, optimizing some of the particular new proxies that are in this new architecture, being able to optimize those to use things like eBPF, as opposed to just using a standard Envoy proxy implementation, to really drive the performance of those components just as hard as we possibly can. So there are definitely some, I'm going to say, incremental changes, not architectural changes, but incremental changes, that I think are going to drive the performance of ambient mesh.

So that's certainly one thing, but we also see a lot of other innovations that are coming along the data plane of the service mesh as well. One that we are really excited about at Solo has to do with GraphQL technology. GraphQL to date has typically required a separate server component that's independent of the rest of the API gateway technology that you might have deployed in your enterprise. And we think fundamentally that's a curious architecture. I have an API gateway today, it knows how to serve up OpenAPI, it knows how to serve SOAP, it knows how to serve gRPC maybe. Why is GraphQL this separate unicorn that needs its own separate server infrastructure that has to be managed? That's two API gateway moving parts instead of one.

And so that's something that we've been working on a lot at Solo, and we have GraphQL solutions out there that allow you to absorb the specialized GraphQL server capabilities into just another Envoy filter on your API gateway, as opposed to having it be this separate unicorn that has to be managed separately. That's something that we see, again in the data plane of the service mesh, that's going to become even more important. My crystal ball, I don't know if I brought it with me today, but if I look into it, GraphQL's adoption curve looks to be moving up pretty quickly. And we're excited about that, and we think there are some opportunities for really improving on that from an operational and an infrastructure standpoint.

Thomas Betts: So the interesting question about that is, in most of the cases I hear about GraphQL, it's that front-end serving layer. I want to have my mobile app be as responsive as possible, it's going to make one call to the GraphQL server to do all the things and then the data's handled. That's why it's got that funny architecture you described. But I see service mesh as mostly on the backend, between the servers. Is this a service mesh at the edge then?

Jim Barton: Service mesh has a number of components. Definitely, with the ambient mesh component that we've been talking about, we're talking, to a large extent, about the internal operations of the service mesh. But an important component of service mesh and, in fact, one of the places where we see the industry converging is the coming together of traditional standalone API gateway technology and service mesh technology. Let's take Istio as an example in the service mesh space: there's always been a modest gateway component, the Istio ingress gateway, that ships with Istio.

It's not that full-featured. At least as it comes out of the box, it's not something that people would typically use to solve complex API gateway sorts of use cases. And so Solo has been in this space for a while and has brought a lot of its standalone API gateway expertise into the service mesh. In fact, we just recently announced a product offering that's part of the overall service mesh platform, called Gloo Gateway, that addresses these very issues: being able to add a sophisticated, full-featured API gateway layer at the north-south boundary of the service mesh.

Thomas Betts: Well, I think that's about it for our time. I want to thank Jim Barton for joining me today, and I hope you'll join us again for another episode of the InfoQ podcast.

References

- Istio Ambient Mesh

- Blog post: Introducing Ambient Mesh