Dr Alan D. Thompson, the man behind lifearchitect.ai, sees the current AI trajectory as a shift more profound than the discovery of fire or the Internet. His Devoxx keynote presented the state of the AI industry, including an introduction to Google's Transformer architecture, which he described as a true transformer of the landscape. It gave rise to new AI models that can create images, write books from scratch, and much more.

The Internet generated huge amounts of money in the 1997-2021 interval. However, that sum is dwarfed, by an estimated factor of 9, by the amount AI is expected to generate in the 2021-2030 interval.

After a long "AI winter", the field seems to be experiencing a true renaissance, ignited largely by Transformer-based models such as OpenAI's Generative Pre-trained Transformer 3 (GPT-3). The Transformer architecture itself was discovered almost accidentally while Google researchers were trying to solve the problem of translating from gendered languages like French or German into English. A transformer does what its name implies: it transforms an input text into an output, and it can answer questions by "understanding" the whole context, a mechanism known as self-attention. In GPT-3, the actual "magic" is powered by a chain of ninety-six decoder layers, each with roughly 1.8 billion parameters of its own (about 175 billion in total). Unlike earlier approaches that looked at individual words, GPT-3 analyzes the whole context.
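To make self-attention a little more concrete, here is a minimal, single-head sketch in plain Python of scaled dot-product self-attention with a causal mask, the variant used in decoder layers such as GPT-3's. It is an illustration under simplifying assumptions, not GPT-3's implementation: the function names, the toy 2-dimensional token vectors and the identity weight matrices are hypothetical, whereas a real model learns its query, key and value weights and stacks many multi-head layers.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability; exp(-inf) becomes 0.0.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Plain-list matrix product: (n x m) @ (m x p) -> (n x p).
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product self-attention (toy sketch).

    x: one row per token; w_q, w_k, w_v: hypothetical projection matrices.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(q[0])
    # Every token scores every other token: this is how the whole context is seen.
    scores = matmul(q, transpose(k))
    out = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:
            # Decoder-style mask: token i may only attend to positions 0..i.
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights = softmax(scaled)
        # Output is a weighted average of the value vectors.
        out.append([sum(weights[j] * v[j][c] for j in range(len(v)))
                    for c in range(len(v[0]))])
    return out


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    for row in self_attention(tokens, identity, identity, identity):
        print([round(val, 3) for val in row])
```

Because of the causal mask, the first token can only attend to itself, while the last token sees the entire sequence; stacking many such layers (ninety-six in GPT-3's case) is what lets the model build up a representation of the whole context.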

Beyond natural language processing (NLP), transformer-based models have produced surprising results in code generation, with claims that they make developers twice as productive as before. As you might expect, big names in technology have their own trained models, like Meta AI's InCoder (6.7B parameters), Amazon's CodeWhisperer (an estimated 10B parameters) and Salesforce's CodeGen (16B parameters). But the largest model by far is Google's PaLM, with 540B parameters. Dr Alan D. Thompson considers its capabilities impressive:

[Google PaLM] it's able to do some outrageous stuff [...] the skills that it has, are outside what it should be capable of

CodeGeeX, a 13B-parameter model with the added benefit that it can be downloaded and trained on-premises, is slightly larger than OpenAI Codex, the model behind Copilot. Its capabilities can be tested online on huggingface.co or directly in Visual Studio Code, for which it provides an extension.

Even models with fewer parameters can achieve impressive results if they are trained on the appropriate data. For example, Google's internal code transformation tool was trained on the company's monorepo, which consolidates codebases written in various programming languages, including C++, Java and Go. This 0.5B-parameter model, small in the scheme of things, was well received by the developers who used it, and their measured productivity and efficiency increased as well.

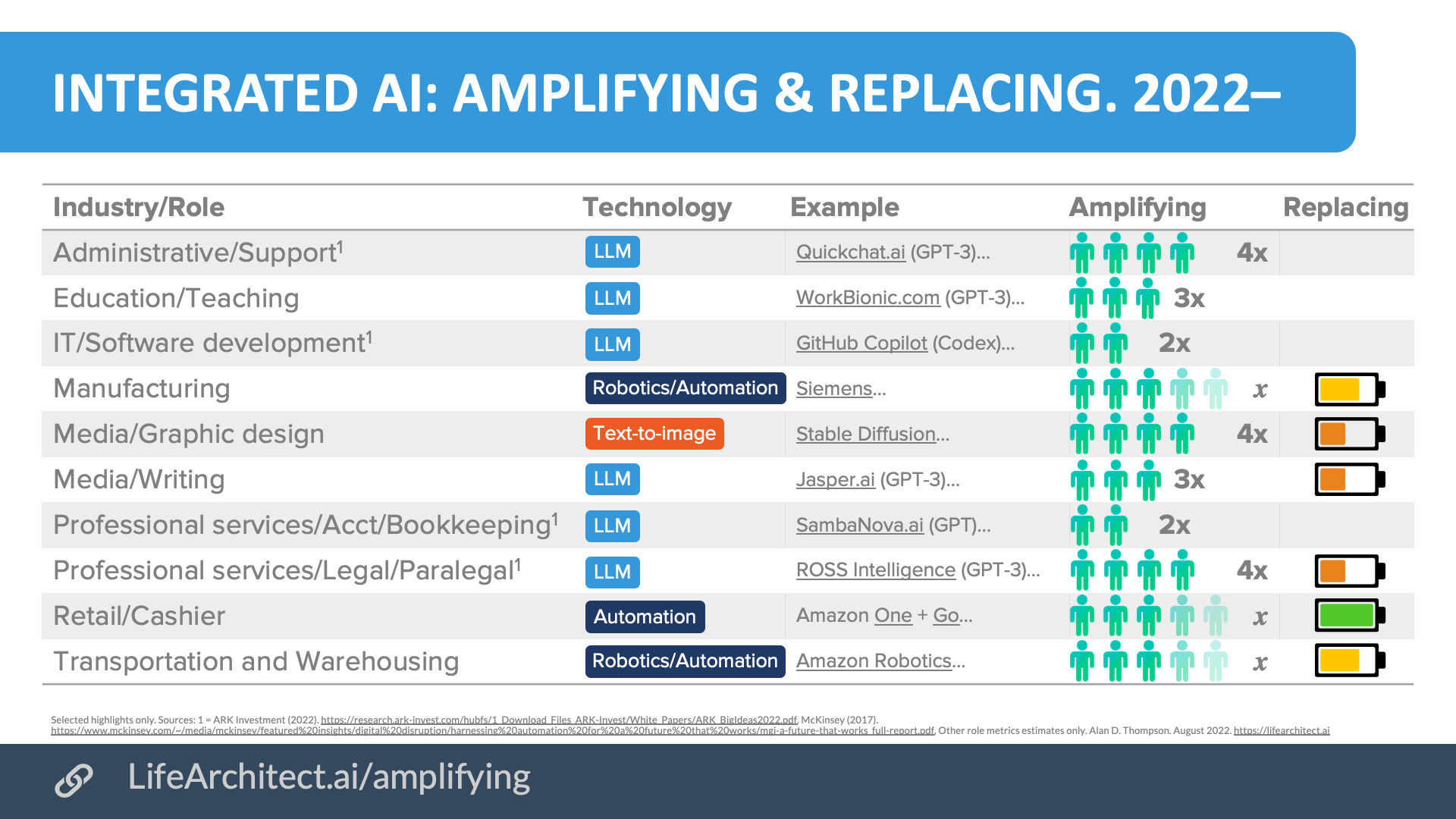

As the capabilities of these models seem limited only by human imagination, many experiments have been run to find their boundaries. Besides the now classic chess matches, trivia problems and math competitions, AI models have been used to write books, compose music and even conceptualise art. Given these impressive results, Dr Thompson claims that the new developments will enhance human abilities and, in some situations, even replace them.

Despite such positive outcomes, how these ML systems work is still a mystery, as OpenAI's InstructGPT paper of 4 March 2022 observes:

...the [GPT-3] model is a big black box, we can’t infer its beliefs

Stanford's research group has drawn a similar conclusion. Nevertheless, interest is high: in 2022, many Fortune 500 companies are experimenting with AI. OpenAI reported that IBM, Cisco, Intel, Twitter, Salesforce, Disney, Microsoft and even governments have all experimented with the GPT-3 model. AI, it seems, is here to stay, even if its future development is hard to anticipate.