At its annual Inspire conference, Microsoft recently announced the public preview of Vector search in Azure Cognitive Search. This new capability indexes, stores, and retrieves vector embeddings from a search index, and is aimed at building applications powered by large language models.

According to Microsoft, Vector search in Azure Cognitive Search uses machine learning to capture the meaning and context of unstructured data, including images and text, to make search faster.

Liam Cavanagh, a principal group product manager of Azure Cognitive Search, explains in a Tech Community blog post:

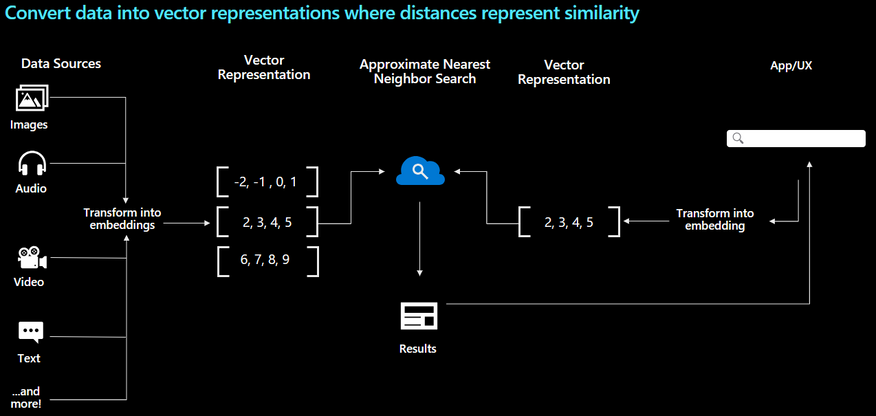

Vector search is a method of searching for information within various data types, including images, audio, text, video, and more. It determines search results based on the similarity of numerical representations of data, called vector embeddings. Unlike keyword matching, Vector search compares the vector representation of the query and content to find relevant results for users. Azure OpenAI Service text-embedding-ada-002 LLM is an example of a powerful embeddings model that can convert text into vectors to capture its semantic meaning.

Diagram of vector representations of data (Source: Tech Community blog post)
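The similarity comparison described in the quote can be sketched as a brute-force k-nearest-neighbor search using cosine similarity. This is a minimal illustration that assumes the embeddings already exist (for example, produced by a model such as text-embedding-ada-002). The document IDs and three-dimensional vectors are hypothetical placeholders, and the sketch scans every vector, whereas the service relies on its own index structures.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means they point the same way)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vector_search(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Score every stored embedding against the query and return the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional embeddings; text-embedding-ada-002 actually outputs 1,536 dimensions.
index = {
    "doc-cats": [0.9, 0.1, 0.0],
    "doc-dogs": [0.8, 0.3, 0.1],
    "doc-tax-law": [0.0, 0.2, 0.9],
}
query = [0.85, 0.2, 0.05]  # hypothetical embedding of a question about pets

print(vector_search(query, index))
```

No keyword is shared between the query and the documents here; the ranking comes entirely from how closely the vectors point in the same direction.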

Users can apply the feature to similarity search, multi-modal search, recommendation engines, or applications implementing the Retrieval Augmented Generation (RAG) architecture. The last of these is driven by the growing need to integrate Large Language Models (LLMs) with custom data. For instance, an application can retrieve relevant information using Vector search, pass the retrieved data to an LLM, and generate responses or actions grounded in that data.
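The retrieve-then-generate flow of the RAG pattern can be sketched in a few lines. This is a hypothetical illustration: `embed` is a toy bag-of-words stand-in for a real embeddings model, the knowledge-base passages are made up, and the final step of sending the prompt to an LLM (for example, through Azure OpenAI Service) is deliberately omitted rather than guessed at.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embeddings model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Retrieval step: rank knowledge-base passages by similarity to the question."""
    q = embed(question)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


def build_grounded_prompt(question: str, passages: list[str], k: int = 2) -> str:
    """Augmentation step: place the retrieved passages in the prompt so the LLM answers from them."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return (
        "Answer using only the sources below.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical knowledge base.
knowledge_base = [
    "Employees accrue 20 vacation days per year.",
    "The cafeteria opens at 8 am.",
    "Unused vacation days expire at the end of the year.",
]
print(build_grounded_prompt("How many vacation days do employees get?", knowledge_base))
```

The generation step would then pass this prompt to the model; keeping retrieval separate makes it easy to swap the toy `embed` for a real embeddings model without touching the rest.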

Pablo Castro, a distinguished engineer at Microsoft, explains in a LinkedIn post:

Vector search also plays an important role in Generative AI applications that use the retrieval-augmented generation (RAG) pattern. The quality of the retrieval system is critical to these apps’ ability to ground responses on specific data coming from a knowledge base. Not only can Azure Cognitive Search now be used as a pure vector database for these scenarios, but it can also be used for hybrid retrieval, delivering the best of vector and text search, and you can even throw in a reranking step for even better quality by enabling it.
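Hybrid retrieval needs a way to merge the ranked list from keyword search with the ranked list from vector search. One common technique is Reciprocal Rank Fusion (RRF), which Azure's documentation describes for scoring hybrid queries. The sketch below is a generic RRF implementation, not the service's own code. The result lists are hypothetical, and the constant `k = 60` is simply a commonly used default.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked lists: each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical result lists for the same query.
keyword_results = ["doc-a", "doc-b", "doc-c"]  # e.g. a BM25 text ranking
vector_results = ["doc-c", "doc-a", "doc-d"]   # e.g. an embedding-similarity ranking

for doc_id, score in reciprocal_rank_fusion([keyword_results, vector_results]):
    print(f"{doc_id}: {score:.4f}")
```

RRF uses only rank positions, so it does not need the keyword scores and the vector similarities to be on comparable scales. Documents that rank well in both lists (here `doc-a` and `doc-c`) rise to the top. An optional reranking step, like the one Castro mentions, would then reorder this fused list.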

Since Vector search is part of Azure Cognitive Search, it also benefits from the service's other features, including faceted navigation and filters. Moreover, with Azure Cognitive Search indexers, users can pull data from various Azure data stores, such as Blob Storage, Azure SQL, and Cosmos DB, to power a unified, AI-powered application.
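Filters and facets can work alongside a vector query. A filter restricts which documents are eligible, similarity ranking orders the eligible ones, and facet counts summarize a field so users can narrow their search. This in-memory sketch is hypothetical: the `category` field, documents, and vectors are invented, and applying the filter before ranking is an assumption of the sketch, not a statement about how the service orders these steps.

```python
import math
from collections import Counter


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def filtered_vector_search(query: list[float], docs: list[dict], allowed_categories: set[str], k: int = 2) -> list[dict]:
    """Keep only documents that pass the filter, then rank those by vector similarity."""
    candidates = [d for d in docs if d["category"] in allowed_categories]
    candidates.sort(key=lambda d: cosine(query, d["vector"]), reverse=True)
    return candidates[:k]


def facet_counts(docs: list[dict], field: str) -> Counter:
    """Count documents per distinct value of a field, as a faceted navigation pane would show."""
    return Counter(d[field] for d in docs)


# Hypothetical documents with a filterable, facetable "category" field.
docs = [
    {"id": "1", "category": "manual", "vector": [0.9, 0.1]},
    {"id": "2", "category": "blog", "vector": [0.95, 0.05]},
    {"id": "3", "category": "manual", "vector": [0.2, 0.8]},
    {"id": "4", "category": "faq", "vector": [0.7, 0.3]},
]
query = [1.0, 0.0]

print(facet_counts(docs, "category"))
print([d["id"] for d in filtered_vector_search(query, docs, {"manual", "faq"})])
```

Document `2` is the closest match to the query vector, but the filter excludes it, so only the best `manual` and `faq` documents are returned.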

According to the company, use cases for Vector search integrated with Azure AI include:

- Search-enabled, chat-based applications using the Azure OpenAI Service

- Conversion of images into vector representations using Azure AI Vision for accurate, relevant text-to-image and image-to-image search experiences

- Fast, accurate retrieval of relevant information from large datasets to help automate processes and workflows

Vectorization is gaining popularity in the field of search. It transforms words or images into numerical vectors that encode their semantic meaning and can be processed mathematically. Representing data as vectors lets machines organize information, identify related words because they sit close together in the vector space, and retrieve them quickly from vast databases containing millions of words.
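The idea that related words sit close together in vector space can be shown with a toy lookup. The two-dimensional word vectors below are hand-picked for illustration, not the output of any real model. Real embeddings are learned from data, have hundreds or thousands of dimensions, and are usually searched with approximate nearest-neighbor indexes rather than the exhaustive scan used here.

```python
import math

# Hand-picked, hypothetical 2-D vectors: dimension 0 ~ "animal-ness", dimension 1 ~ "vehicle-ness".
WORD_VECTORS = {
    "cat": [0.9, 0.1],
    "kitten": [0.85, 0.15],
    "dog": [0.8, 0.2],
    "car": [0.1, 0.9],
    "truck": [0.15, 0.85],
}


def nearest_words(word: str, n: int = 2) -> list[str]:
    """Return the n words whose vectors are closest (Euclidean distance) to the given word's vector."""
    target = WORD_VECTORS[word]
    others = [w for w in WORD_VECTORS if w != word]
    return sorted(others, key=lambda w: math.dist(target, WORD_VECTORS[w]))[:n]


print(nearest_words("cat"))
print(nearest_words("car"))
```

Euclidean distance is used here for simplicity; cosine similarity is another common choice for comparing embeddings.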

Amazon and Google also use the technique in their offerings: Google in Vertex AI Matching Engine and in managed databases such as Cloud SQL and AlloyDB, and Amazon in OpenSearch. In addition, Microsoft offers vectorization capabilities in another service, Azure Data Explorer (ADX).

Lastly, Vector search in Azure Cognitive Search is currently available in all regions at no cost. Code samples for Vector search are available in a GitHub repository.